CAPTCHAs are everywhere, and almost universally hated. Whether it's deciphering blurry text or clicking every fire hydrant in a grid, users are routinely interrupted by challenges that are hard to solve and even harder to justify. For most people, failing a CAPTCHA feels like being wrongly accused of being a bot. And for companies, that friction translates into real costs: increased user drop-off, lower conversion rates, and more support tickets.

But here’s the kicker: CAPTCHAs aren’t just frustrating, they’re increasingly ineffective from a security standpoint.

Modern bots routinely bypass them using CAPTCHA solvers (also known as CAPTCHA farms), which are tools that solve challenges at scale, often through a single API call. Many anti-detect browser frameworks now include built-in support for services like CapSolver. Attackers no longer need to write custom logic or outsource to human farms. They simply pass the challenge to an AI-based solver and move on.

We recently investigated a fake account creation campaign targeting a large gaming platform. It was a sustained attack: more than 38,000 automated signup attempts in a single month. The bots used automated Chrome instances, custom email domains, and, notably, a CAPTCHA solver browser extension. But the site wasn’t even protected by a CAPTCHA during the attack. The extension was simply part of the attacker's default stack.

This case is a useful lens into a broader trend. In this article, we’ll:

- Explain how CAPTCHA solvers work and how they’ve evolved

- Walk through the attack and how we detected it using behavioral and fingerprinting signals

- Break down the real limits of CAPTCHA in 2025, and what actually works against modern bots

If you’re still relying on CAPTCHAs to protect account creation or login endpoints, this is your wake-up call.

What CAPTCHA solvers actually do, and how they evolved

To understand why CAPTCHAs are no longer a reliable defense in 2025, it’s important to examine how attackers actually bypass them, and how those methods have evolved.

Let’s be clear: using a CAPTCHA solver isn’t the only way to break a CAPTCHA. Some sophisticated attackers build their own solvers tailored to specific challenges. They automate browsers with clean fingerprints, mimic human-like mouse movements, and apply image or audio recognition techniques to pass the challenge. But these setups are complex and often brittle.

Third-party CAPTCHA solvers, on the other hand, are more generic, easier to use, and increasingly common. That’s why we focus on them here.

CAPTCHA solvers, from human farms to AI pipelines: A CAPTCHA solver, also known as a CAPTCHA farm, is a commercial service designed to solve CAPTCHA challenges on behalf of bots. These services are typically exposed through APIs or browser extensions and are built to integrate directly into bot frameworks.

Historically, CAPTCHA solvers operated using human labor. Bots would send the CAPTCHA challenge to a low-cost worker, usually in a farm-style setup, and wait for a human to respond. This method worked, but it was slow, error-prone, and expensive to scale.

That model is increasingly obsolete.

Modern solvers like CapSolver instead rely on a combination of reverse engineering and AI-based visual or audio recognition. Rather than simulating a browser or executing the page's JavaScript, they extract key parameters such as the image, site key, and challenge token. The solver then uses a model to return the correct answer within a few seconds.

The technical details are intentionally opaque. But based on our analysis of solver behavior, including pricing, response time, and the lack of full browser interaction, we infer that many challenges are now solved entirely offline using pre-trained models. For more context, see our deep dive into a Binance CAPTCHA solver.

APIs built for scale: Most CAPTCHA farms expose developer-friendly APIs that let attackers offload solving with minimal effort. Here's a simplified example from 2Captcha, targeting an anonymized site:

```json
{
  "clientKey": "YOUR_API_KEY",
  "task": {
    "type": "CaptchaType",
    "websiteURL": "https://targetedwebsite.com",
    "captchaUrl": "https://captchaUrl.com/captchaId",
    "userAgent": "Mozilla/5.0 (Windows NT 10.0...)",
    "proxyType": "http",
    "proxyAddress": "1.2.3.4",
    "proxyPort": "8080",
    "proxyLogin": "user23",
    "proxyPassword": "p4$w0rd"
  }
}
```

This request ensures that the CAPTCHA solver uses the same IP address and user agent as the bot. That consistency helps avoid additional bot detection triggers like impossible travel or browser fingerprint mismatches.
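Impossible travel is worth making concrete, since it is one of the checks this setup is designed to sidestep. Below is a minimal defender-side sketch, assuming two events attributed to the same session or account have already been geolocated from their IP addresses. The `Event` type, its fields, and the 900 km/h threshold are illustrative assumptions, not any vendor's actual rule.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_KM = 6371.0
# Assumed threshold: roughly airliner cruising speed. Real systems tune this.
MAX_PLAUSIBLE_SPEED_KMH = 900.0


@dataclass
class Event:
    """Hypothetical event record: a Unix timestamp plus an IP-derived position."""
    timestamp: float  # seconds since epoch
    lat: float        # degrees
    lon: float        # degrees


def haversine_km(a: Event, b: Event) -> float:
    """Great-circle distance between two events, in kilometres."""
    phi1, phi2 = math.radians(a.lat), math.radians(b.lat)
    dphi = phi2 - phi1
    dlmb = math.radians(b.lon - a.lon)
    h = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def is_impossible_travel(a: Event, b: Event,
                         max_speed_kmh: float = MAX_PLAUSIBLE_SPEED_KMH) -> bool:
    """Flag two events whose implied travel speed exceeds max_speed_kmh."""
    distance = haversine_km(a, b)
    hours = abs(b.timestamp - a.timestamp) / 3600.0
    if hours == 0:
        # Simultaneous events: tolerate only tiny geolocation jitter.
        return distance > 1.0
    return distance / hours > max_speed_kmh
```

Because the solver request above reuses the bot's own proxy, both events geolocate to the same point and a check like this passes.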

Browser extensions for plug-and-play automation: Some CAPTCHA farms, including CapSolver, also provide browser extensions. These can be embedded into the headless Chrome environments used by automation frameworks like Puppeteer or Playwright. For attackers, this means they don’t need to write any solving logic. They just install the extension, provide their API key, and the CAPTCHA is handled automatically in the background.

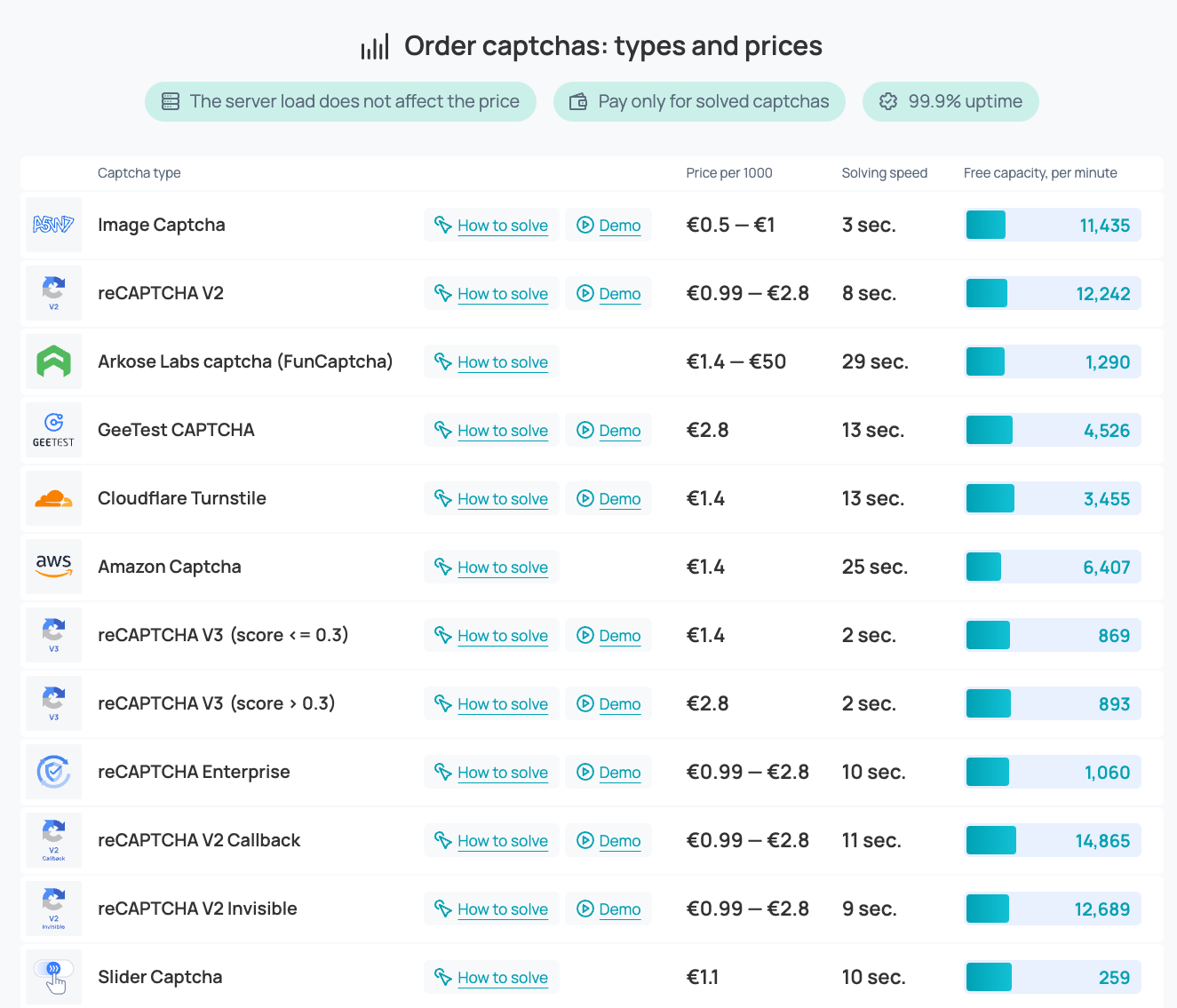

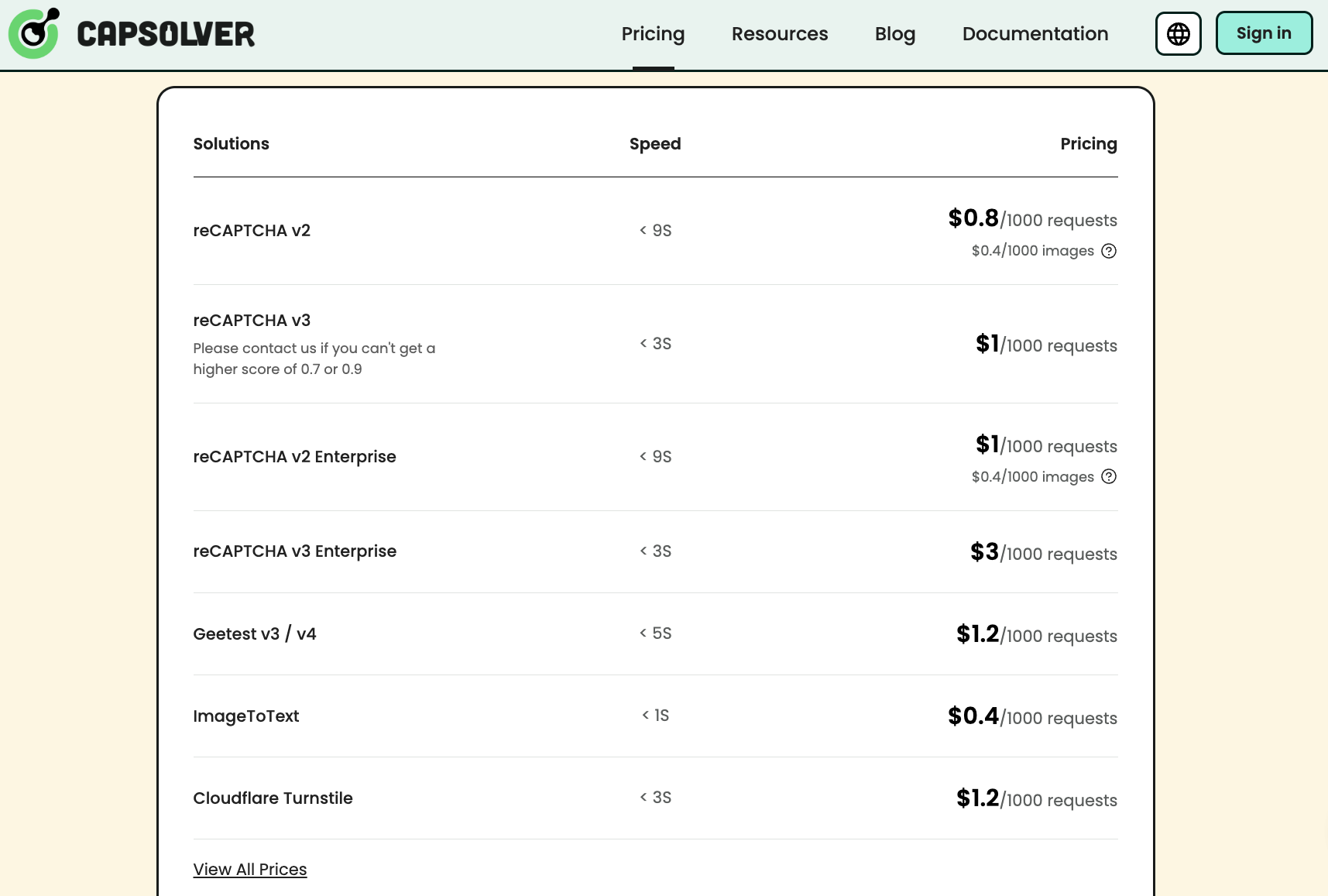

Cheap, fast, and scalable: CAPTCHA solvers are extremely affordable. At the time of writing:

- CapSolver charges $0.80 per 1,000 reCAPTCHA v2 challenges

- 2Captcha ranges from around $1.00 to $2.80 per 1,000, depending on challenge type and complexity
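To make those prices concrete, here is the arithmetic for a campaign the size of the one in the case study below (38,000 signup attempts). It assumes, as a simplification, exactly one solved challenge per attempt and ignores failed solves and volume discounts.

```python
def solving_cost_usd(challenges: int, price_per_thousand_usd: float) -> float:
    """Cost of solving `challenges` CAPTCHAs at a per-1,000 price, rounded to cents."""
    return round(challenges / 1000 * price_per_thousand_usd, 2)


SIGNUP_ATTEMPTS = 38_000  # size of the campaign in the case study

for label, price in [("CapSolver reCAPTCHA v2", 0.80),
                     ("2Captcha, low end", 1.00),
                     ("2Captcha, high end", 2.80)]:
    print(f"{label}: ${solving_cost_usd(SIGNUP_ATTEMPTS, price):.2f}")
```

At the listed prices, that works out to roughly $30 to $106 for the entire month-long campaign.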

Some websites make this even easier by issuing temporary tokens or cookies once a CAPTCHA is passed. These tokens let bots skip future challenges during the same session, which means attackers might only need to solve a single CAPTCHA for dozens of automated requests.
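One mitigation for this particular weakness is to cap what a single solve buys. The sketch below is a hypothetical in-memory store for the site-issued "CAPTCHA passed" token, with an assumed TTL and use limit. It does nothing to stop a solver from passing the challenge in the first place, and a real deployment would keep this state in a shared store such as Redis rather than in process memory.

```python
import secrets
import time
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class PassGrant:
    expires_at: float  # Unix time after which the token is rejected
    uses_left: int     # protected requests this token may still authorize


@dataclass
class CaptchaPassStore:
    """Hypothetical store for post-CAPTCHA pass tokens.

    Each token expires after `ttl_seconds` and authorizes at most `max_uses`
    protected requests, so one solved challenge cannot cover an unbounded burst.
    """
    ttl_seconds: float = 300.0
    max_uses: int = 1
    _grants: Dict[str, PassGrant] = field(default_factory=dict, repr=False)

    def issue(self, now: Optional[float] = None) -> str:
        """Create a fresh token after a successful CAPTCHA verification."""
        now = time.time() if now is None else now
        token = secrets.token_urlsafe(32)
        self._grants[token] = PassGrant(now + self.ttl_seconds, self.max_uses)
        return token

    def consume(self, token: str, now: Optional[float] = None) -> bool:
        """Return True and spend one use if the token is still valid."""
        now = time.time() if now is None else now
        grant = self._grants.get(token)
        if grant is None:
            return False
        if now >= grant.expires_at or grant.uses_left <= 0:
            del self._grants[token]  # expired or exhausted: forget it
            return False
        grant.uses_left -= 1
        if grant.uses_left == 0:
            del self._grants[token]
        return True
```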

Bottom line: Solving a CAPTCHA no longer requires a real browser, a human worker, or any meaningful effort. In many cases, it is just another API call: cheap, fast, and fully integrated into modern bot frameworks.

Case study: how an attack unfolded against a gaming platform

Before we return to CAPTCHA solvers and their use in real-world bot operations, let’s look at an actual attack we observed targeting a major gaming platform protected by Castle.

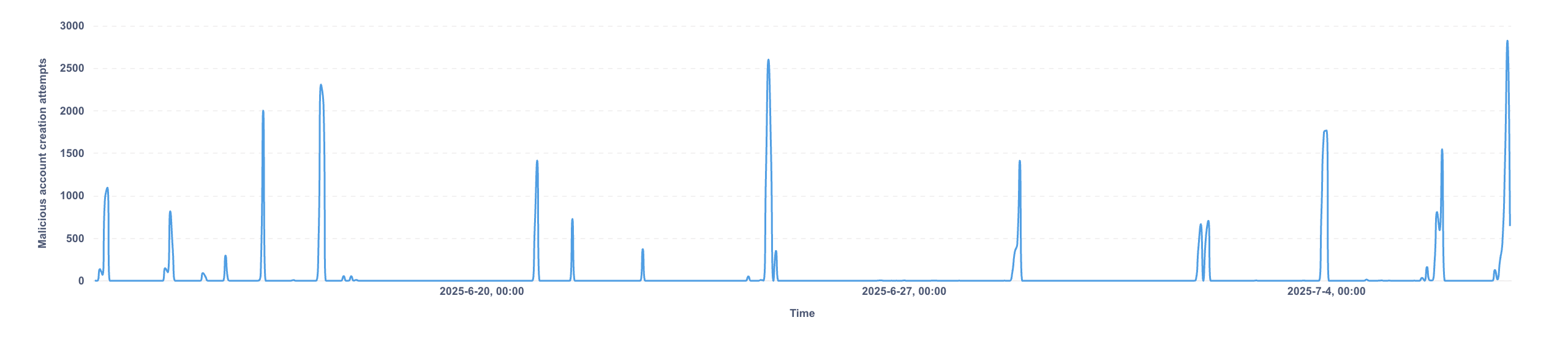

Over the span of a month, the platform was hit by a large-scale fake account creation campaign. We detected more than 38,000 malicious automated signup attempts.

The graph below shows the number of attack attempts per hour. At its peak, the botnet attempted over 2,700 account creations in a single hour.
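Producing hourly counts like these from raw events is a simple aggregation. A minimal sketch, assuming the attack's signup attempts have already been identified and are available as Unix timestamps; the function names are illustrative.

```python
from collections import Counter
from datetime import datetime, timezone
from typing import List, Tuple


def attempts_per_hour(timestamps: List[float]) -> Counter:
    """Bucket Unix timestamps (seconds) into UTC hours and count attempts per bucket."""
    buckets: Counter = Counter()
    for ts in timestamps:
        hour = datetime.fromtimestamp(ts, tz=timezone.utc).replace(
            minute=0, second=0, microsecond=0)
        buckets[hour] += 1
    return buckets


def peak_hour(timestamps: List[float]) -> Tuple[datetime, int]:
    """Return the busiest hour and its attempt count (timestamps must be non-empty)."""
    return attempts_per_hour(timestamps).most_common(1)[0]
```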

This was not a low-effort attack. The bots showed clear signs of sophistication:

- They used automated Chrome instances but avoided standard headless and automation indicators, such as `navigator.webdriver = true` or Chrome DevTools Protocol (CDP) side effects

- They avoided known disposable email providers, instead relying on custom attacker-controlled domains that were not flagged by public blocklists

Why highlight this in an article about CAPTCHA solvers?

Because even though the site under attack did not use a CAPTCHA at the time, we detected the presence of a CAPTCHA-solving browser extension in the attacking bots. That detail is an important signal about how automation infrastructure is built and reused across targets.

How we detected the bots, and what we found: Castle blocked the attack based on multiple technical and behavioral signals:

- Email reputation: The attackers used custom domains, many of which were newly registered or displayed suspicious patterns such as typos or naming conventions that resemble disposable services. While these domains did not appear on open-source blocklists, they correlated strongly with malicious behavior in our models.

- Fingerprinting inconsistencies: The user agent string claimed the bots were running on Linux, but Castle’s JavaScript-based fingerprinting revealed traits consistent with Windows. For example, the font list included fonts found on Windows systems but not on Linux. Other signals reinforced the mismatch. This kind of inconsistency is often associated with anti-detect browsers or misconfigured automation stacks. Notably, most bots today avoid claiming Linux in the user agent, as some detection engines treat it as inherently more suspicious.

- Extension fingerprinting: Our detection system identified two browser extensions in use:

  - MetaMask, a common crypto wallet extension often seen in legitimate traffic

  - CapSolver, a CAPTCHA solver extension typically used in automated environments

Castle does not use any APIs to detect extensions directly. Instead, our fingerprinting agent uses stealth JavaScript challenges designed to detect side effects introduced by specific extensions. These tests run silently and do not trigger console logs or visible errors. Through this method, we are able to detect the presence of extensions like CapSolver based on how they alter the browser environment.
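The Linux/Windows font mismatch described earlier comes down to a cross-check between what the user agent claims and what the fingerprint observes. Below is a minimal server-side sketch, assuming the client-side agent has already reported a set of detected fonts. The font sets and the UA parsing are deliberately crude, illustrative assumptions, not Castle's detection logic.

```python
from typing import Set

# Illustrative subsets only; real font signatures are much larger and noisier.
WINDOWS_ONLY_FONTS = {"Segoe UI", "Calibri", "Consolas"}
LINUX_TYPICAL_FONTS = {"DejaVu Sans", "Liberation Sans", "Ubuntu"}


def claimed_os(user_agent: str) -> str:
    """Very rough OS extraction from a user agent string."""
    if "Windows NT" in user_agent:
        return "windows"
    if "Android" in user_agent:  # Android UAs also contain "Linux", so check first
        return "android"
    if "Mac OS X" in user_agent:
        return "mac"
    if "Linux" in user_agent:
        return "linux"
    return "unknown"


def os_font_mismatch(user_agent: str, detected_fonts: Set[str]) -> bool:
    """True when the UA claims Linux but the fonts look like Windows.

    This is one weak signal to combine with others, not proof of automation.
    """
    if claimed_os(user_agent) != "linux":
        return False
    has_windows_fonts = bool(detected_fonts & WINDOWS_ONLY_FONTS)
    has_linux_fonts = bool(detected_fonts & LINUX_TYPICAL_FONTS)
    return has_windows_fonts and not has_linux_fonts
```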

What the presence of CapSolver tells us: CapSolver on its own doesn't prove malicious behavior, since some legitimate users install CAPTCHA solvers to reduce friction. In this context, however, it was a strong signal.

More importantly, it confirms that CAPTCHA-solving services are used in real-world bot operations. Because Castle identifies specific extensions through the side effects they introduce, we know with high confidence that the attacking bots had CapSolver installed and active across sessions.

That raises the obvious question: why was CapSolver installed at all, given that the site under attack did not use a CAPTCHA?

We can’t be certain, but two plausible explanations stand out:

- Residual configuration from earlier targets: This gaming platform may have used a traditional CAPTCHA before switching to Castle. The attacker could have simply reused or only partially updated their automation stack, leaving CapSolver installed.

- Shared infrastructure across targets: The attacker may use the same bots across multiple sites, some of which do rely on CAPTCHAs. In that case, keeping CapSolver installed is part of maintaining a general-purpose automation setup.

Either way, the key takeaway is clear. CapSolver wasn’t installed by accident. Its presence shows that CAPTCHA-solving services are built directly into the attacker’s toolkit. This is not a theory. It is happening in the wild, at scale, today.

Why CAPTCHAs are failing in 2025

Despite being one of the most recognizable security mechanisms on the web, CAPTCHAs have become ineffective against modern bots. They are still widely deployed, often out of habit, but they no longer offer meaningful protection against determined attackers. Worse, they create real friction for legitimate users.

Let’s break down why.

Security limitations: Today’s bots are not simple scripts. They operate headlessly, simulate human behavior, and integrate tools like CapSolver or 2Captcha to solve challenges automatically. These solvers remove the need for manual logic or human interaction. CAPTCHA solving has become a commoditized service.

Browser extensions, API wrappers, and cloud-based solvers make it easy for attackers to solve thousands of CAPTCHAs for a few dollars. Many challenges can be solved without rendering the CAPTCHA or running any client-side JavaScript. The attacker just submits a few parameters and receives a valid response.

Bypassing a CAPTCHA no longer requires creativity. It just requires a credit card.

Worse still, many websites continue to treat CAPTCHAs as their primary or only defense. Once the challenge is solved, attackers can proceed with no resistance. Without additional behavioral or contextual signals, the system sees a passed CAPTCHA and assumes the user is human.
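It helps to look at what the server actually learns when it verifies a CAPTCHA. With Google reCAPTCHA, the backend POSTs the client's token to the `siteverify` endpoint and receives JSON with fields such as `success`, `challenge_ts`, and `hostname`. The sketch below checks those fields against a hypothetical expected hostname and freshness window (reCAPTCHA v3 responses also carry `score` and `action`, which are not handled here). Note what it cannot tell you: a token produced by a solver service for the right site key passes these checks just like one produced by a human.

```python
import json
import urllib.parse
import urllib.request
from datetime import datetime, timedelta, timezone
from typing import Optional

SITEVERIFY_URL = "https://www.google.com/recaptcha/api/siteverify"


def fetch_siteverify(secret: str, token: str, remote_ip: Optional[str] = None) -> dict:
    """POST the client token to reCAPTCHA's siteverify endpoint and parse the JSON reply."""
    fields = {"secret": secret, "response": token}
    if remote_ip:
        fields["remoteip"] = remote_ip
    data = urllib.parse.urlencode(fields).encode()
    with urllib.request.urlopen(SITEVERIFY_URL, data=data, timeout=5) as resp:
        return json.load(resp)


def token_is_acceptable(result: dict, expected_hostname: str,
                        max_age: timedelta, now: datetime) -> bool:
    """Check success, hostname, and freshness. Says nothing about *who* solved it."""
    if not result.get("success"):
        return False
    if result.get("hostname") != expected_hostname:
        return False
    ts = result.get("challenge_ts")
    if not ts:
        return False
    solved_at = datetime.fromisoformat(ts.replace("Z", "+00:00"))
    if solved_at.tzinfo is None:
        solved_at = solved_at.replace(tzinfo=timezone.utc)  # assume UTC if unspecified
    return now - solved_at <= max_age
```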

UX cost: On the user side, CAPTCHAs have always been frustrating. That frustration has only increased. Modern challenges are more frequent, harder to pass, and often inaccessible on mobile devices or for users with disabilities.

We’ve seen the impact across multiple customers. CAPTCHAs lower signup completion rates, increase abandonment, and damage trust, all while offering little protection from sophisticated automation.

Strategic takeaway: In 2025, it is clear that CAPTCHAs are no longer an effective security control. They introduce friction without stopping serious threats.

At Castle, we rely on layered detection instead. Effective bot detection depends on combining multiple weak signals to form a high-confidence decision:

- Device fingerprinting: to detect inconsistencies between claimed and observed environments, and to identify side effects from automation frameworks and anti-detect browsers

- Behavioral analysis: to flag automation based on movement patterns, navigation flows, or timing anomalies

- Reputational and contextual signals: including domain age, email history, proxy detection, user’s preferred language, timezone, and more

These signals are combined using machine learning models and logic rules. They work together to detect abuse quietly and accurately, without bothering legitimate users. Everything happens in the background, with no added friction or visual challenge.
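To illustrate the idea of combining weak signals, here is a minimal sketch of a logistic scoring function. The signal names map loosely to the categories above. The weights, bias, and [0, 1] normalization are hypothetical placeholders, not Castle's models, which per the text combine machine learning with logic rules.

```python
import math
from dataclasses import dataclass


@dataclass
class Signals:
    """Hypothetical weak signals for one signup attempt, each normalized to [0, 1]."""
    fingerprint_inconsistency: float  # e.g. UA claims Linux, fonts look like Windows
    automation_artifacts: float       # e.g. solver-extension side effects, CDP traces
    behavioral_anomaly: float         # e.g. machine-regular timing, no pointer movement
    email_domain_risk: float          # e.g. newly registered domain
    network_risk: float               # e.g. known proxy or datacenter IP


# Illustrative weights and bias; a real system would learn these from labeled traffic.
WEIGHTS = {
    "fingerprint_inconsistency": 2.5,
    "automation_artifacts": 2.0,
    "behavioral_anomaly": 1.5,
    "email_domain_risk": 1.5,
    "network_risk": 1.0,
}
BIAS = -4.0


def bot_probability(s: Signals) -> float:
    """Logistic combination: one weak signal stays low, several together add up."""
    z = BIAS + sum(w * getattr(s, name) for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))
```

With these placeholder weights, a lone fingerprint mismatch scores about 0.18, while a fingerprint mismatch plus a solver extension plus a risky email domain scores about 0.88.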

Used properly, this kind of detection can flag bots even when they pass superficial checks like CAPTCHAs. And unlike CAPTCHAs, it does so invisibly, with no negative impact on user experience.