A recent Hacker News post looked at the reverse engineering of TikTok’s JavaScript virtual machine (VM). Many commenters assumed the VM was malicious, designed for invasive tracking or surveillance.

But based on the VM’s behavior and string patterns, a more plausible explanation is that it's designed to make automated abuse harder, especially from bots that don’t use a real browser. Like many large platforms, TikTok faces constant attacks from bots trying to create fake accounts, hijack popular profiles, or abuse APIs using lightweight HTTP clients.

To defend against this, TikTok obfuscates part of its client-side logic using a custom JavaScript VM. This VM executes code that checks for telltale signs of automation, like the presence of webdriver or canvas fingerprinting techniques that leverage the toDataURL function, both of which appear in the VM’s string output. These patterns are commonly used in fingerprinting and bot detection.

The goal is to raise the cost of bot development by forcing attackers to run full browser environments, making automation slower, more fragile, and easier to detect.

To be clear, this interpretation is based solely on the reverse-engineered code and common anti-bot techniques. I don’t have insight into TikTok’s internal decision-making, but this VM aligns with strategies widely used across the industry.
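For illustration, the two signals named above (a `webdriver` flag and a canvas fingerprint built on `toDataURL`) could be collected along these lines. This is a minimal sketch and not TikTok's code: `collectAutomationSignals`, `hashString`, and the `env` parameter are hypothetical names introduced here, and the drawing commands are arbitrary.

```javascript
// Small non-cryptographic string hash, used only to shorten the canvas output.
function hashString(str) {
  let h = 0;
  for (let i = 0; i < str.length; i++) {
    h = (h * 31 + str.charCodeAt(i)) >>> 0; // keep the value in unsigned 32-bit range
  }
  return h.toString(16);
}

// `env` defaults to the global object so the function can also be run against a mock.
function collectAutomationSignals(env = globalThis) {
  const nav = env.navigator || {};
  const signals = {
    // Automation frameworks typically set navigator.webdriver to true.
    webdriver: nav.webdriver === true,
    canvasHash: null,
  };

  const doc = env.document;
  if (doc && typeof doc.createElement === 'function') {
    const canvas = doc.createElement('canvas');
    const ctx = canvas.getContext('2d');
    if (ctx) {
      // Rendering differs slightly across GPUs, drivers, and fonts,
      // so the serialized image acts as a device signal.
      ctx.font = '16px sans-serif';
      ctx.fillText('fingerprint', 2, 20);
      signals.canvasHash = hashString(canvas.toDataURL());
    }
  }
  return signals;
}
```

A plain HTTP client has neither `navigator` nor a canvas, so it would have to fabricate both values convincingly.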

This article breaks down:

- What kinds of abuse bots perform on platforms like TikTok

- How HTTP-based bots differ from browser automation

- Why enforcing JavaScript execution is more difficult than it sounds

- How VM-based obfuscation fits into a broader anti-bot strategy

What types of bots target platforms like TikTok?

Like most large-scale websites and mobile applications, especially social media platforms, TikTok is heavily targeted by bots. Automation enables fraud at scale, and bots can be used to exploit TikTok’s infrastructure in several ways:

- Mass account creation: Attackers create large numbers of fake accounts to amplify scams, manipulate algorithms, or build inventories of accounts for resale.

- Spam and phishing: Fake accounts are used to post malicious comments or send messages that link to phishing pages or promote crypto scams.

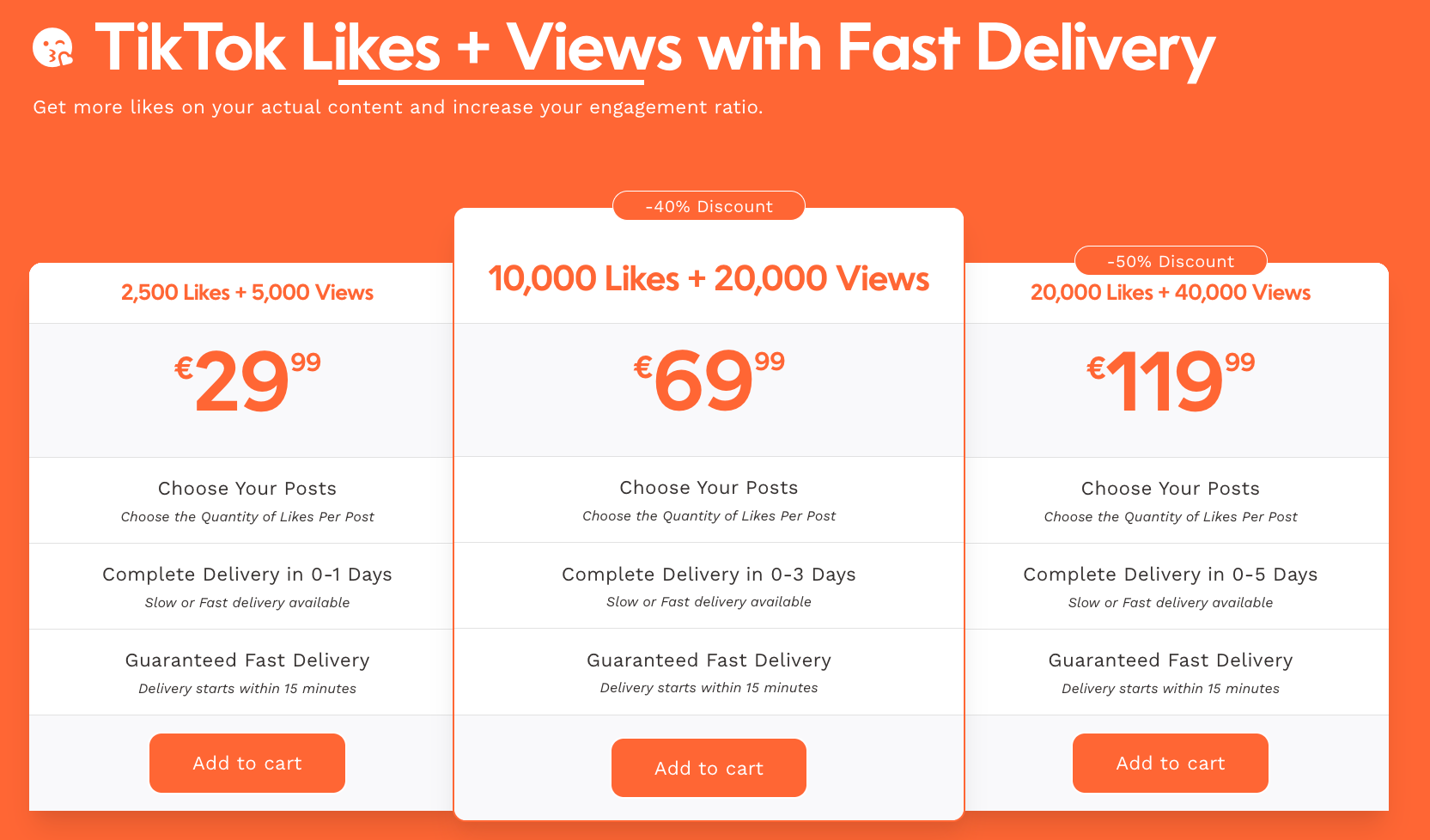

- Engagement fraud: Bots artificially inflate metrics like views, likes, or follower counts. This creates the illusion of popularity and drives real user engagement. Entire marketplaces exist to sell these services (see the screenshot above).

- Credential stuffing: Bots automate login attempts using stolen username and password pairs from past breaches. Because many users reuse credentials across websites, a breach elsewhere can lead to account takeover here. This is especially risky for high-profile users.

These are not hypothetical threats. Numerous online services allow users to buy engagement metrics like views or likes. To deliver at scale, these services rely on automated bots.

How attackers build bots

Bot developers typically choose between two approaches when building automation:

- Browser-based bots, which control a real or headless browser

- HTTP-based bots, which bypass the browser entirely and replicate sequences of API calls directly

Browser-based bots: These bots use real browsers, or headless variants, driven by automation frameworks like Puppeteer or Playwright. The scripts can simulate user actions like opening a page, clicking a button, or submitting a form, just as a human would.

This method offers nearly full access to the site’s frontend environment, including JavaScript execution, rendering, and client-side storage. Because the automation runs inside a real browser, it also benefits from built-in handling of cookies, sessions, and HTTP headers, so there is no need to replicate any of this manually.

Modern tools like Playwright’s codegen even allow developers to record interactions in the browser and automatically generate the corresponding automation scripts. However, this convenience comes at a cost. Running full browsers at scale requires significant compute resources and orchestration. Launching and managing thousands of browser instances in parallel introduces real infrastructure overhead. Moreover, browser automation often leaves detectable traces, such as anomalies in the browser fingerprint.

HTTP-based bots: In contrast, HTTP bots skip the browser entirely. They replicate the sequence of HTTP requests that a browser would make, typically targeting the platform’s APIs directly. For example, a bot might send a POST request to the login endpoint without executing any client-side code:

    fetch('https://example.com/api/login', {
      method: 'POST',
      headers: { 'Content-Type': 'application/json' },
      body: JSON.stringify({ username: 'user', password: 'pass' }),
    });

This method is extremely lightweight and cost-effective. Without needing to spin up a browser, HTTP bots can run at a much higher scale with minimal infrastructure. However, HTTP-based bots are harder to build and maintain. Bot developers must manually reverse engineer the request sequence, inspect payloads, extract dynamic headers, and manage cookies. Tools like browser devtools or proxies like Charles and Burp Suite are often used to capture this data.

The real challenge comes when websites introduce anti-bot protections. Unlike browser-based bots, HTTP bots must replicate or bypass any JavaScript logic that runs in the browser. When defenses change, the bots often break and require reverse engineering to restore functionality, a process that can be time-consuming and difficult to automate.

Most sophisticated bots don’t necessarily use real browsers

You might think it's simple to block bots that don’t leverage a real or headless browser: just require them to execute JavaScript and they will fail. However, it's not that straightforward. Verifying that an adversarial user, or a bot, has executed JavaScript is harder than it seems and is often easy for attackers to bypass.

Consider a simple example where a login request includes a signal that indicates whether JavaScript was executed:

    function hasExecutedJs() {
      return true;
    }

    fetch('/api/login', {
      method: 'POST',
      body: JSON.stringify({
        username: 'foo',
        password: 'bar',
        executedJs: hasExecutedJs(),
      }),
    });

Clearly, an attacker can hardcode true into the executedJs field of their request to make it look like they executed JavaScript.

A natural next step is to make the hasExecutedJs function more dynamic, for example by using a hash or a simple proof-of-work (PoW):

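    // Toy rolling hash (not cryptographic). Each character is mixed into the
    // running value, so different nonces spread across the 0000-9999 range
    // and the proof-of-work loop can find a match.
    function simpleHash(str) {
      let hash = 0;
      for (let i = 0; i < str.length; i++) {
        hash = (hash * 31 + str.charCodeAt(i)) % 10000;
      }
      return hash.toString().padStart(4, '0');
    }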

    function simplePoW(seed, prefix = '00') {
      let nonce = 0;
      while (true) {
        const h = simpleHash(seed + nonce);
        if (h.startsWith(prefix)) return nonce;
        nonce++;
      }
    }

    fetch('/api/login', {
      method: 'POST',
      body: JSON.stringify({
        username: 'foo',
        password: 'bar',
        executedJs: simplePoW(seed),
      }),
    });

Ideally, the seed should be dynamic. It could be linked to the current time or derived from user-specific data such as their email address or user ID. On the server side, you could verify the result using the following function. The nonce is the output of the simplePoW function, computed in the browser and sent in the executedJs field of the payload:

    function verifyPoW(seed, nonce, prefix = '00') {
      const h = simpleHash(seed + nonce);
      return h.startsWith(prefix);
    }

Of course, attackers won’t give up in the face of a basic proof-of-work challenge. They will extract the client-side code, reimplement it in their automation language of choice (such as Node.js or Python), and run it before sending the API request. This allows them to mimic JavaScript execution without ever running a real browser.

And some attackers go much further. For high-value targets, they may optimize this process to industrial scale. One example is a CUDA-based solver that rewrites a JavaScript proof-of-work challenge in CUDA to run on the GPU. This allows bots to generate tokens offline at massive speed, sidestepping in-browser execution entirely and turning your anti-bot check into a parallelized backend task.
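To make the "dynamic seed" idea from this section concrete, here is a minimal Node.js sketch in which the server derives the seed from the user ID and a time window, and the puzzle uses SHA-256 instead of the toy hash. All names (`SERVER_SECRET`, `WINDOW_MS`, `issueSeed`, `solvePoW`, `verifySubmission`) and the chosen difficulty are assumptions for illustration, not a description of any real deployment.

```javascript
const nodeCrypto = require('node:crypto');

const SERVER_SECRET = 'replace-with-a-real-secret'; // hypothetical; never shipped to the client
const WINDOW_MS = 60_000; // a seed is valid for the current and the previous minute

function sha256Hex(input) {
  return nodeCrypto.createHash('sha256').update(input).digest('hex');
}

// Server side: the seed depends on the user and the current time window,
// so a solution computed for one user or one minute is useless elsewhere.
function issueSeed(userId, now = Date.now()) {
  const timeWindow = Math.floor(now / WINDOW_MS);
  return nodeCrypto
    .createHmac('sha256', SERVER_SECRET)
    .update(`${userId}:${timeWindow}`)
    .digest('hex');
}

// Client side: brute-force a nonce whose hash starts with the prefix.
function solvePoW(seed, prefix = '000') {
  let nonce = 0;
  while (!sha256Hex(seed + nonce).startsWith(prefix)) nonce++;
  return nonce;
}

// Server side: recompute the seed instead of trusting one sent by the client.
function verifySubmission(userId, nonce, now = Date.now(), prefix = '000') {
  for (const t of [now, now - WINDOW_MS]) {
    if (sha256Hex(issueSeed(userId, t) + nonce).startsWith(prefix)) return true;
  }
  return false;
}
```

Even in this form, nothing stops an attacker from porting `solvePoW` to another language or to a GPU. The puzzle only adds cost, which is why the client-side logic itself also needs protecting.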

Obfuscation makes reverse engineering harder

To defend against HTTP-based bots, many anti-bot vendors and platforms obfuscate their JavaScript code, especially logic related to proof-of-work and browser fingerprinting.

Obfuscation is the process of transforming readable JavaScript into a form that is intentionally difficult to analyze. This makes it harder for attackers to understand how data such as mouse coordinates or fingerprinting signals is collected, processed, and sent to the server.

    function hi() {
      console.log("Hello World!");
    }

    hi();

For example, the simple hi function above becomes significantly more complex once obfuscated (using obfuscator.io):

    (function(c,d){var j={c:0x189,d:0x185,e:0x186,f:0x17f,g:0x181,k:0x18a,l:0x184,m:0x187,n:0x188,o:0x182,p:0x183},h=b,e=c();while(!![]){try{var f=-parseInt(h(j.c))/0x1*(parseInt(h(j.d))/0x2)+parseInt(h(j.e))/0x3+parseInt(h(j.f))/0x4+parseInt(h(j.g))/0x5+-parseInt(h(j.k))/0x6*(-parseInt(h(j.l))/0x7)+parseInt(h(j.m))/0x8*(parseInt(h(j.n))/0x9)+parseInt(h(j.o))/0xa*(-parseInt(h(j.p))/0xb);if(f===d)break;else e['push'](e['shift']());}catch(g){e['push'](e['shift']());}}}(a,0x80111));function b(c,d){var e=a();return b=function(f,g){f=f-0x17e;var h=e[f];return h;},b(c,d);}function hi(){var k={c:0x180,d:0x17e},i=b;console[i(k.c)](i(k.d));}function a(){var l=['264tMmieM','1936683wLHivm','4wxTGko','344310GJPCrh','120FuRLHD','147573baabXp','346365vSYbjQ','6yrErEF','Hello\x20World!','2176560uQrwTI','log','4812585uAEmDN','386150nXVJRu'];a=function(){return l;};return a();}hi();

Tools like obfuscator.io apply techniques such as variable renaming, string encoding, and control flow flattening to make code harder to read. However, because these tools are open-source and widely adopted, they are also well understood by attackers. Projects like deobfuscate.io exist specifically to reverse their transformations. Skilled reverse engineers build custom decompilers or use automated tooling to extract and reimplement the logic regardless.
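Control flow flattening is the least intuitive of these techniques, so a hand-written sketch may help. The two functions below behave identically; the second is roughly the shape an obfuscator produces, with the original branches replaced by a state machine. The function names and state numbers are made up for this example and are not the output of any specific tool.

```javascript
// Original, readable version.
function classify(n) {
  if (n < 0) return 'negative';
  if (n === 0) return 'zero';
  return 'positive';
}

// Flattened version: every basic block becomes a `case`, and a state
// variable decides which block runs next. The original if/else structure
// is no longer visible in the source.
function classifyFlattened(n) {
  let state = 0;
  let result;
  while (state !== -1) {
    switch (state) {
      case 0: state = n < 0 ? 1 : 2; break;
      case 1: result = 'negative'; state = -1; break;
      case 2: state = n === 0 ? 3 : 4; break;
      case 3: result = 'zero'; state = -1; break;
      case 4: result = 'positive'; state = -1; break;
    }
  }
  return result;
}
```

Real obfuscators additionally shuffle the case order and encode the state values, but the dispatcher pattern stays recognizable, which is part of why automated deobfuscators can undo it.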

VM-based obfuscation: raising the bar

VM-based obfuscation takes things a step further. Instead of transforming the original JavaScript code, it compiles it into bytecode, which is then executed by a custom JavaScript-based virtual machine embedded in the page.

In practice:

- Logic is written in JavaScript (or even in TypeScript).

- This logic is compiled to a custom bytecode, a stream of opaque instructions.

- A custom interpreter, also written in JavaScript, executes the bytecode at runtime.

From the outside, it becomes more difficult to understand what the code does without stepping through every instruction in the VM. To reverse engineer it, an attacker must:

- Understand the VM instruction set

- Extract or reimplement the interpreter

- Manually decode or emulate the bytecode

This significantly raises the effort required to replicate frontend logic in a non-browser environment, especially if the VM or bytecode is frequently updated.
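The three steps above can be sketched with a toy stack-based VM. This is a deliberately tiny illustration, far simpler than a production VM: the opcode names, their numeric values, and the `run` function are all hypothetical.

```javascript
// Hypothetical instruction set. A real VM would have many more opcodes,
// and their numeric values would likely change between builds.
const OP = { PUSH: 0, LOAD: 1, ADD: 2, MUL: 3, HALT: 4 };

// The interpreter: reads one instruction at a time and manipulates a stack.
function run(bytecode, inputs) {
  const stack = [];
  let pc = 0; // program counter
  while (pc < bytecode.length) {
    const op = bytecode[pc++];
    switch (op) {
      case OP.PUSH: stack.push(bytecode[pc++]); break;         // push a constant
      case OP.LOAD: stack.push(inputs[bytecode[pc++]]); break; // push an input value
      case OP.ADD: { const b = stack.pop(); const a = stack.pop(); stack.push(a + b); break; }
      case OP.MUL: { const b = stack.pop(); const a = stack.pop(); stack.push(a * b); break; }
      case OP.HALT: return stack.pop();
      default: throw new Error(`Unknown opcode ${op}`);
    }
  }
  throw new Error('Program ended without HALT');
}

// "Compiled" form of the expression (x + 3) * 2.
const program = [OP.LOAD, 0, OP.PUSH, 3, OP.ADD, OP.PUSH, 2, OP.MUL, OP.HALT];

console.log(run(program, [5])); // 16
```

Someone who only sees the array `[1, 0, 0, 3, 2, 0, 2, 3, 4]` cannot tell what it computes without first working out what each number means to the interpreter.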

While I can’t speak for TikTok with certainty, based on the signals computed inside the VM, it's very likely that TikTok uses this approach to protect the logic responsible for generating fingerprinting and bot detection signals. By embedding this logic inside a VM, TikTok makes it far more difficult for HTTP-based bots to spoof requests with valid client-side signals.

But this technique doesn't just target HTTP bots. It also complicates efforts for developers building browser-based bots. Even with access to a full browser environment, attackers must still reverse engineer the obfuscated signal computation logic in order to tamper with it or replay it reliably.

Preventing replay attacks: VM-based obfuscation is particularly effective at preventing replay attacks, where a bot captures a valid HTTP request from a real browser and reuses it later, possibly with slight modifications, to bypass detection.

If the JavaScript that generates the request parameters is protected inside a VM, and tied to dynamic inputs like timestamps or user identifiers, replaying a previously valid request becomes significantly more difficult. The attacker must execute the original obfuscated logic in a browser every time, which increases cost and reduces scalability.
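On the server side, rejecting replays could look like the following minimal Node.js sketch. It assumes the protected client logic produces an HMAC over the user ID, a timestamp, and a one-time nonce. The field names, `MAX_AGE_MS`, and the in-memory `seenNonces` set are assumptions for illustration, and a real system would need shared storage with expiry.

```javascript
const nodeCrypto = require('node:crypto');

const MAX_AGE_MS = 30_000;    // hypothetical freshness window
const seenNonces = new Set(); // in-memory only, for illustration

// The same computation is assumed to run inside the protected client code.
function sign(secret, userId, timestamp, nonce) {
  return nodeCrypto
    .createHmac('sha256', secret)
    .update(`${userId}|${timestamp}|${nonce}`)
    .digest('hex');
}

function isFreshRequest(secret, req, now = Date.now()) {
  const { userId, timestamp, nonce, signature } = req;

  // 1. Reject requests whose timestamp is too old (or too far in the future).
  if (Math.abs(now - timestamp) > MAX_AGE_MS) return false;

  // 2. Reject any nonce that has already been used: this is the replay check.
  if (seenNonces.has(nonce)) return false;

  // 3. Check the signature in constant time.
  const expected = Buffer.from(sign(secret, userId, timestamp, nonce), 'hex');
  const provided = Buffer.from(String(signature), 'hex');
  if (expected.length !== provided.length) return false;
  if (!nodeCrypto.timingSafeEqual(expected, provided)) return false;

  seenNonces.add(nonce);
  return true;
}
```

Any key material embedded in the client is only as secret as the obfuscation around it, which is exactly the role the VM plays here.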

VM-based obfuscation is not a silver bullet, but it reshapes the battlefield

VM-based obfuscation does not eliminate bots. Skilled attackers can still build browser-based bots that rely on full Chrome or headless variants to automate logins, account creation, and other flows.

What it does achieve is forcing attackers to switch to tooling that is easier to detect. By making it impractical to run bot logic outside the browser, VM-based protections push attackers toward browser automation frameworks that introduce detectable artifacts.

Once bots operate inside real browsers, server-side detection becomes more effective. Defenders can apply the usual bot detection techniques, such as device fingerprinting and behavioral analysis.

By forcing attackers into an execution environment you control, you reduce their ability to operate at scale and increase your visibility into abusive traffic. Attackers may still attempt to reverse engineer the VM and replay valid HTTP requests. But doing so reliably requires building a dynamic and resilient decompiler. Even minor changes to the bytecode format or the VM layout can break these tools. This introduces a powerful asymmetry: defenders can rotate implementations with minimal effort, while attackers must reinvest significant time to adapt. That slows them down and increases their maintenance burden over time.
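One way such a rotation could work is to reassign opcode numbers at build time, so bytecode captured from one release is meaningless to a decoder written for another. The sketch below assumes a build step with a symbolic program. `OPCODE_NAMES`, `makeOpcodeTable`, and `encode` are hypothetical, and a real pipeline would regenerate the interpreter's dispatch table to match.

```javascript
const OPCODE_NAMES = ['PUSH', 'LOAD', 'ADD', 'MUL', 'HALT'];

// Assign each opcode name a number, shuffled per build (Fisher-Yates).
// `rng` is injectable so a build can be made reproducible.
function makeOpcodeTable(rng = Math.random) {
  const values = OPCODE_NAMES.map((_, i) => i);
  for (let i = values.length - 1; i > 0; i--) {
    const j = Math.floor(rng() * (i + 1));
    [values[i], values[j]] = [values[j], values[i]];
  }
  return Object.fromEntries(OPCODE_NAMES.map((name, i) => [name, values[i]]));
}

// Turn a symbolic program (opcode names plus numeric operands) into bytecode
// for one specific build. Operands are left untouched.
function encode(symbolicProgram, table) {
  return symbolicProgram.map((item) => (typeof item === 'string' ? table[item] : item));
}

// The same logic, (x + 3) * 2, yields different bytecode for each table.
const symbolic = ['LOAD', 0, 'PUSH', 3, 'ADD', 'PUSH', 2, 'MUL', 'HALT'];
const buildA = encode(symbolic, makeOpcodeTable());
const buildB = encode(symbolic, makeOpcodeTable());
```

For the defender this is one extra build step. For the attacker, each rotation means identifying the new mapping before any existing tooling works again.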

Conclusion

TikTok’s use of a virtual machine may seem unusual at first, but it is actually a common technique used to protect web applications from bots. VM-based obfuscation helps hide the logic that generates fingerprinting and anti-bot signals, so that bots cannot easily fake them.

This technique does not stop all bots. Skilled attackers can still use browser automation tools to interact with the site. But by making it harder to run bot logic outside the browser, VM-based protections increase the cost of attacks and reduce the number of tools attackers can use.

Once bots are forced to run inside real browsers, it becomes easier for defenders to detect them using fingerprinting and behavior analysis. Even if attackers reverse engineer the virtual machine, small changes to the code can break their tools and slow them down.

VM-based obfuscation is not perfect, but it is an effective way to protect key parts of a website and reduce the impact of automated abuse at scale.