CAPTCHAs are the most recognizable anti-bot mechanism on the web. Whether you're logging into a game, signing up for a new service, or checking out online, chances are you've been asked to click on traffic lights, solve a puzzle, or interpret distorted letters. These tests—Completely Automated Public Turing tests to tell Computers and Humans Apart—were designed to do exactly that: keep bots out.

But the landscape has changed.

As automation frameworks have matured and AI-driven solvers have improved, CAPTCHAs are no longer the gatekeepers they once were. They still serve a purpose, but as a standalone defense, they often fail silently, frustrating legitimate users more than stopping sophisticated attackers.

This article breaks down how CAPTCHAs work, the types you’ll encounter, and how attackers bypass them. More importantly, it explains why the “hard challenge” model no longer holds up—and what modern defenses should look like in 2025.

What is a CAPTCHA? A quick definition and why it’s losing ground

CAPTCHA stands for Completely Automated Public Turing test to tell Computers and Humans Apart. As the name suggests, it’s a challenge designed to separate human users from bots.

The original idea was straightforward: computers couldn’t solve certain visual or audio puzzles that humans could. By exploiting this asymmetry, websites could filter out automation. Tasks like reading warped text or recognizing objects in grainy photos created a barrier that real users could pass, but bots could not.

That assumption held up for years. CAPTCHAs became a fixture across login pages, sign-up forms, and comment sections.

But over time, the model began to crack.

As machine learning advanced, so did bots' ability to handle what were once uniquely human tasks. Today’s automation tools can transcribe distorted audio, classify images, and even emulate mouse movements with uncanny precision. The very premise of CAPTCHA—that a challenge can be hard for bots but easy for humans—is rapidly collapsing.

Worse, increasing the difficulty doesn’t always improve security. It just frustrates users. At some point, you’re left with puzzles that humans can solve, but no longer want to.

For regulated environments like banking, that tradeoff might be acceptable. But if you’re running a content site, marketplace, or SaaS product, users may not tolerate that kind of friction just to view a product page or read an article.

CAPTCHA technology has evolved in response. Today, challenges include:

- Image-based CAPTCHAs that ask users to click on crosswalks or traffic lights

- Slider puzzles that test coordination and behavior

- Audio CAPTCHAs for accessibility

- Math or logic questions

- Invisible CAPTCHAs like reCAPTCHA v3, which silently evaluate risk in the background

Despite their variety, these tests all stem from the same basic idea: using challenge-response friction to weed out bots. But as we’ll explore next, attackers have also evolved, and the friction often lands harder on users than on fraudsters.

Common CAPTCHA types

CAPTCHAs come in many forms, each designed to challenge bots in different ways—whether through distorted visuals, interaction-based tasks, or silent background checks. Below are the most common types, along with real-world examples that illustrate how each one works in practice.

1. Text-based CAPTCHAs

These are the original format: a series of distorted characters rendered inside an image. Users must transcribe the text correctly to pass. To make it harder for bots, designers often introduce random colors, wave effects, background noise, and overlapping shapes.

While still widely used, this format is easily defeated by simple OCR models and rarely stops anything beyond the most basic bots.

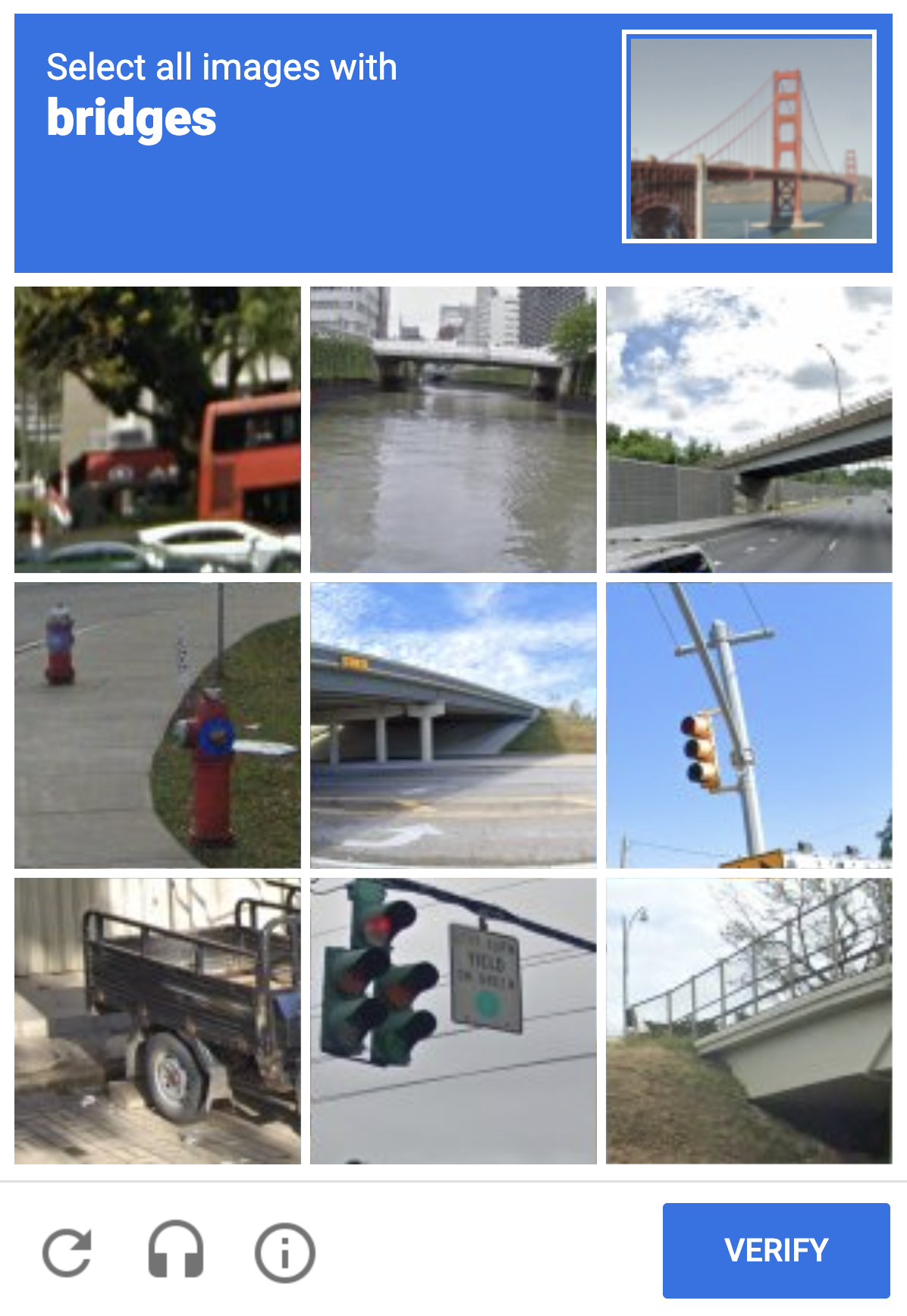

2. Image recognition CAPTCHAs

These challenges ask users to identify specific objects, such as traffic lights, crosswalks, or bicycles, within a grid of images. They were built on the assumption that image classification was too complex for bots to perform reliably, an assumption that modern vision models have largely erased.

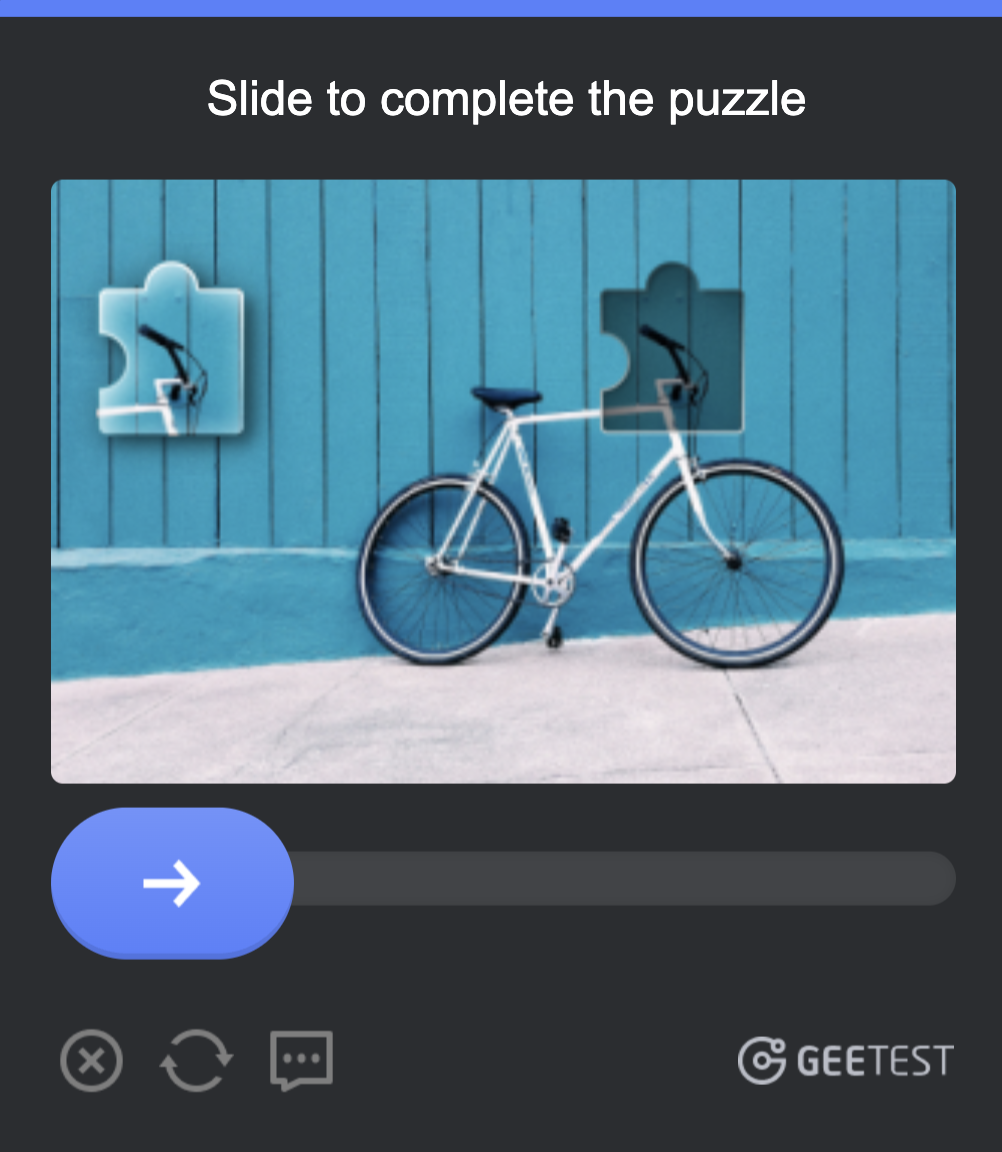

3. Slider CAPTCHAs

Slider CAPTCHAs ask users to drag a puzzle piece into place, completing an image. They often integrate behavioral analysis, tracking how you drag the piece to detect scripted movement.

Unlike simple image recognition, these CAPTCHAs rely on capturing subtle interaction signals, which makes them harder (but not impossible) to spoof.

4. Math and logic challenges

These CAPTCHAs pose simple arithmetic or logic puzzles, such as “What is 3 + 4?” or “Which shape doesn’t belong?”. They’re easy for humans to complete and fast to display, but they are also easy for a bot to solve with a few lines of script.

Some implementations here blur the line between “logic” and “just another input field,” and their security benefit is marginal.

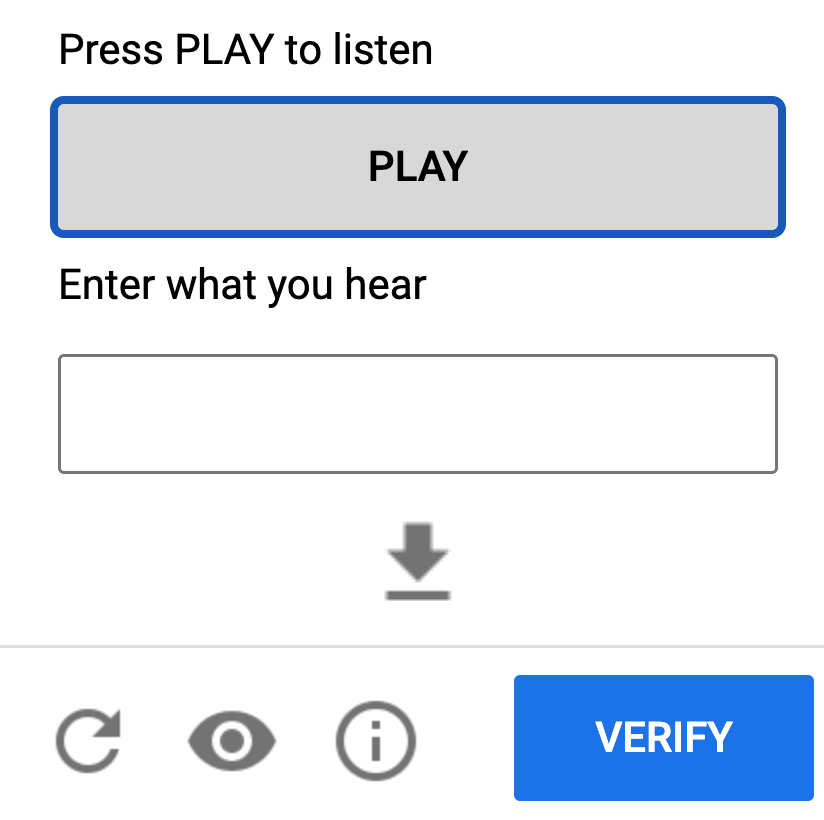
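To illustrate how little resistance an arithmetic prompt offers, here is a minimal sketch of a parser that answers questions of the form "What is 3 + 4?". The function name `solve_math_prompt` and the set of supported operators are assumptions made for this example; real prompts vary, but most reduce to the same kind of pattern match.

```python
import operator
import re
from typing import Optional

# Operator symbols a simple math CAPTCHA might use (an assumed set).
_OPERATIONS = {
    "+": operator.add,
    "-": operator.sub,
    "*": operator.mul,
    "x": operator.mul,
}

# Two integers separated by one of the operator symbols above.
_PATTERN = re.compile(r"(\d+)\s*([+\-*x])\s*(\d+)")


def solve_math_prompt(prompt: str) -> Optional[int]:
    """Return the answer to a prompt like 'What is 3 + 4?', or None if no
    arithmetic expression is found in the text."""
    match = _PATTERN.search(prompt)
    if match is None:
        return None
    left, symbol, right = match.groups()
    return _OPERATIONS[symbol](int(left), int(right))


print(solve_math_prompt("What is 3 + 4?"))  # 7
```

A prompt with no arithmetic in it, such as a shape question, returns `None` here, which is why logic variants hold up slightly better than plain sums.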

5. Audio CAPTCHAs

To accommodate visually impaired users, audio CAPTCHAs play a voice clip with distorted speech that must be transcribed. While helpful for accessibility, they're also susceptible to speech-to-text solvers and replay attacks.

The balance between accessibility and robustness is particularly delicate in this category.

6. Invisible CAPTCHAs (e.g., reCAPTCHA v3 and Turnstile)

Instead of showing a challenge, these CAPTCHAs operate in the background. They analyze behavioral and environmental signals to score the likelihood that a visitor is human. Users with a high trust score see no challenge at all.

Some products, like Turnstile, are technically not fully “invisible,” but their one-click design and lack of puzzles place them in the same category of low-friction user verification.

How CAPTCHAs work behind the scenes

To most users, a CAPTCHA feels like a simple hurdle: solve a puzzle, and you're in. But beneath that surface lies a more involved system, especially with modern CAPTCHA providers.

The standard challenge-response flow: Every CAPTCHA follows a basic multi-party interaction between the user’s browser, the website, and the CAPTCHA provider:

- CAPTCHA served: The website embeds a CAPTCHA widget and delivers it to the user’s browser.

- User responds: The user solves the challenge, and the browser submits the response (with hosted providers, typically a token produced by the widget) to the website’s server.

- Server validates: The website forwards the response to the CAPTCHA provider (e.g., Google or Cloudflare) for verification.

- Trust token issued: If the response is valid, the website may issue a token (e.g., a cookie or session flag) to avoid repeating the CAPTCHA too soon.

This process helps ensure the CAPTCHA was actually solved by a human, not a bot, or at least, that it looked that way.
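The "server validates" step can be sketched as follows, using Google's documented reCAPTCHA `siteverify` endpoint as the example provider. The helper names (`verify_captcha`, `post_form`) and the injectable `transport` parameter are assumptions made for this sketch, not part of any provider's SDK; other providers expose similar endpoints with their own URLs and response fields.

```python
import json
import urllib.parse
import urllib.request
from typing import Callable, Optional

# reCAPTCHA's server-side verification endpoint.
VERIFY_URL = "https://www.google.com/recaptcha/api/siteverify"


def post_form(url: str, fields: dict) -> dict:
    """POST form-encoded fields and decode the JSON reply."""
    body = urllib.parse.urlencode(fields).encode("ascii")
    request = urllib.request.Request(url, data=body)
    with urllib.request.urlopen(request, timeout=5) as reply:
        return json.loads(reply.read().decode("utf-8"))


def verify_captcha(
    token: str,
    secret: str,
    remote_ip: Optional[str] = None,
    transport: Callable[[str, dict], dict] = post_form,
) -> bool:
    """Ask the provider whether the token submitted by the browser is valid."""
    if not token:
        return False  # nothing to verify
    fields = {"secret": secret, "response": token}
    if remote_ip:
        fields["remoteip"] = remote_ip  # optional parameter
    try:
        result = transport(VERIFY_URL, fields)
    except (OSError, ValueError):
        # Network failure or malformed JSON: this sketch fails closed.
        return False
    return result.get("success") is True
```

Passing the transport as a parameter keeps the function testable without network access. Whether to fail open or closed when the provider is unreachable is a product decision; the sketch assumes failing closed.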

Simple challenge-based CAPTCHAs: Traditional CAPTCHAs rely entirely on the puzzle’s difficulty. These assume that bots can’t:

- Interpret distorted text

- Classify noisy images

- Emulate precise user interactions like dragging a slider

While these assumptions worked a decade ago, advances in machine learning and browser automation have made such challenges increasingly solvable, often with higher accuracy and speed than real users.

Signal-enriched CAPTCHAs: To keep up, newer CAPTCHA systems augment the puzzle with background signals that help estimate user legitimacy. These signals include:

- Behavioral cues: Mouse movements, drag trajectories, timing delays, and gesture fluidity

- Device fingerprinting: Characteristics like screen resolution, timezone, installed fonts, WebGL and canvas output

- Network-level traits: IP reputation, geolocation anomalies, and proxy detection

These inputs are used to compute a risk score. If the score is low (i.e., the user looks safe), the CAPTCHA might be hidden entirely. If the score is high, a harder or alternative challenge might be triggered.

Machine learning and adaptive difficulty: Some CAPTCHA providers go a step further, using machine learning models trained on historical traffic. This allows them to dynamically adjust friction:

- High-risk conditions (new IP, suspicious device): harder challenge

- Low-risk conditions (returning user, trusted fingerprint): skip or soften the challenge

This helps reduce unnecessary friction for legitimate users. But it also creates new attack surfaces—bots can be trained to mimic safe behaviors or operate through low-risk intermediaries.

Modern CAPTCHAs aren’t just one-off tests anymore; they’re behavioral filters that blend client-side interaction with server-side scoring. That makes them smarter than before. But as we’ll see next, it still doesn’t make them effective on their own.

CAPTCHA limitations and how attackers bypass them

CAPTCHAs were designed to stop automated abuse, but in practice, they often frustrate users more than they slow down attackers. As bots get smarter and CAPTCHA solvers become commoditized, the limitations of these challenges become increasingly clear.

Poor user experience: Most CAPTCHA friction is borne by legitimate users. On mobile, small screens and touch controls make visual puzzles harder to solve. Challenges can also be cognitively fatiguing, especially when repeated across sessions or shown unnecessarily. For users with motor, visual, or cognitive impairments, the experience can be outright exclusionary.

The result? Higher bounce rates, dropped signups, and lower conversion across the board.

Accessibility shortfalls: Audio CAPTCHAs were designed to improve accessibility, but they introduce their own hurdles. Distorted clips are often difficult to parse, especially for non-native speakers or people with auditory processing issues. Ironically, the very distortions that were meant to stop bots now undermine usability, while offering little protection in return.

Modern speech-to-text APIs can solve these challenges with minimal effort.

Off-the-shelf automation: Attackers no longer need to reverse-engineer CAPTCHA logic—they can simply plug in a solution:

- Solver APIs like 2Captcha or Anti-Captcha offer real-time challenge solving via simple integrations.

- Bot frameworks like Puppeteer and Playwright have third-party plugins and widely shared code samples for CAPTCHA handling.

- ML-powered solvers can now break visual and audio CAPTCHAs using image classification and speech recognition.

- Human farms provide real-time labor to solve challenges for a fraction of a cent per CAPTCHA.

Many of these methods are fully automated. Bots can solve and submit challenges with no visible delay.

Replay and token abuse: Most CAPTCHA systems issue a signed token after completion. In theory, this token proves that a human solved the challenge. In practice, attackers can:

- Solve a CAPTCHA once (manually or via API)

- Extract the token

- Reuse it across multiple sessions or workflows wherever the site does not bind the token to a session or enforce single use

This turns a one-time friction point into a scalable bypass strategy, especially for credential stuffing or fake account creation.
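One way to blunt this kind of reuse is to make the site-issued pass token short-lived, single-use, and tied to the session that earned it. The sketch below is a minimal illustration under those assumptions; the class name `PassTokenIssuer`, the token layout, and the in-memory set of used nonces are all hypothetical, and a real deployment would need a shared store with expiry.

```python
import hashlib
import hmac
import secrets
import time
from typing import Optional


class PassTokenIssuer:
    """Issues short-lived, single-use tokens bound to one session."""

    def __init__(self, key: bytes, ttl_seconds: int = 120):
        self._key = key
        self._ttl = ttl_seconds
        self._used = set()  # redeemed nonces (in-memory for this sketch only)

    def _sign(self, payload: str) -> str:
        return hmac.new(self._key, payload.encode("utf-8"), hashlib.sha256).hexdigest()

    def issue(self, session_id: str, now: Optional[float] = None) -> str:
        """Create a token after the CAPTCHA has been verified for this session."""
        now = time.time() if now is None else now
        nonce = secrets.token_hex(16)
        expires = int(now) + self._ttl
        signature = self._sign(f"{session_id}|{nonce}|{expires}")
        return f"{nonce}|{expires}|{signature}"

    def redeem(self, token: str, session_id: str, now: Optional[float] = None) -> bool:
        """Accept a token once, only for the session it was issued to."""
        now = time.time() if now is None else now
        try:
            nonce, expires_text, signature = token.split("|")
            expires = int(expires_text)
        except ValueError:
            return False  # malformed token
        expected = self._sign(f"{session_id}|{nonce}|{expires}")
        if not hmac.compare_digest(signature.encode("utf-8"), expected.encode("utf-8")):
            return False  # forged, or issued to a different session
        if now > expires or nonce in self._used:
            return False  # expired or already spent
        self._used.add(nonce)
        return True
```

Because the session identifier is part of the signed payload, a token extracted from one session fails verification in another, and the nonce check rejects a second use within the same session.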

Ultimately, CAPTCHAs are still effective against the lowest-effort bots. But for anything more sophisticated, they create more friction for real users than for attackers.

Better defenses in 2025

CAPTCHAs may still play a role, but they’re no longer enough. The most capable bots now present near-perfect fingerprints and behavior, using automation frameworks, CAPTCHA solvers, and anti-detect browsers to blend in. Blocking them requires a layered defense strategy that doesn’t rely on a single gate, especially not one they can outsource to a solver.

Key components of a modern bot defense stack

1. Device fingerprinting

Fingerprinting builds a unique profile of each visitor’s device and browser using properties like screen resolution, WebGL output, canvas behavior, and audio stack APIs. This can help identify:

- Headless Chrome or other automation tools

- Spoofed or virtualized environments

- Rapid rotations between browser identities

Example: A browser claiming to be Safari but rendering a canvas fingerprint like Chrome may signal an anti-detect setup.
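A consistency check of this kind can be sketched as a comparison between what the browser claims and what client-side script observes. The `ClientSignals` fields and the specific rules below are assumptions chosen for illustration (they rely on `navigator.webdriver`, `navigator.vendor`, and the `HeadlessChrome` user-agent marker); production fingerprinting compares far more attributes, and none of these rules is conclusive on its own.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ClientSignals:
    """A few attributes collected from the request and client-side script."""
    user_agent: str        # User-Agent request header
    navigator_vendor: str  # navigator.vendor as reported in the browser
    webdriver: bool        # navigator.webdriver


def fingerprint_anomalies(signals: ClientSignals) -> List[str]:
    """Return human-readable reasons the client looks inconsistent."""
    anomalies = []
    user_agent = signals.user_agent

    if signals.webdriver:
        anomalies.append("navigator.webdriver is true")
    if "HeadlessChrome" in user_agent:
        anomalies.append("user agent advertises headless Chrome")

    # Chrome-family user agents also contain "Safari/", so a Safari claim
    # is a "Safari/" marker without a "Chrome/" or "Chromium/" marker.
    claims_safari = (
        "Safari/" in user_agent
        and "Chrome/" not in user_agent
        and "Chromium/" not in user_agent
    )
    if claims_safari and signals.navigator_vendor != "Apple Computer, Inc.":
        anomalies.append(
            f"claims Safari but navigator.vendor is {signals.navigator_vendor!r}"
        )
    return anomalies
```

An empty list does not prove the client is genuine; it only means these particular checks found no contradiction. The result is best treated as one input to a broader score.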

2. Behavioral analytics

Bots tend to behave too fast, too uniformly, or too perfectly. Real users hesitate, scroll unevenly, and don’t interact in predictable loops. Tracking patterns like mouse movement, typing cadence, and click behavior helps surface non-human sessions.

Pair this with behavior-based velocity controls: look for patterns like repeated interactions with login or signup endpoints across multiple devices or geographies.
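The "too uniform" signal can be approximated by measuring how much the gaps between consecutive events vary. The sketch below uses the coefficient of variation of those gaps; the function names and the `min_variation` threshold of 0.1 are illustrative assumptions, not tuned values, and a real system would learn thresholds from its own traffic.

```python
import statistics
from typing import Optional, Sequence


def interval_variation(timestamps: Sequence[float]) -> Optional[float]:
    """Coefficient of variation (stdev / mean) of the gaps between
    consecutive event timestamps, or None if there is too little data."""
    if len(timestamps) < 3:
        return None
    gaps = [later - earlier for earlier, later in zip(timestamps, timestamps[1:])]
    mean_gap = statistics.fmean(gaps)
    if mean_gap <= 0:
        return None
    return statistics.pstdev(gaps) / mean_gap


def looks_scripted(timestamps: Sequence[float], min_variation: float = 0.1) -> bool:
    """Flag event streams whose timing is suspiciously regular."""
    variation = interval_variation(timestamps)
    return variation is not None and variation < min_variation


# Clicks fired on a fixed 100 ms timer versus irregular human-like timing.
print(looks_scripted([0.0, 0.1, 0.2, 0.3, 0.4]))    # True
print(looks_scripted([0.0, 0.4, 0.55, 1.9, 2.1]))   # False
```

Bots that add random jitter will pass a check this simple, which is why timing is usually combined with other behavioral and device signals.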

3. Rate-limiting and velocity checks

Even stealthy bots often need scale to be effective. Monitoring request volumes, endpoint hit rates, or action repetition by device or IP helps catch:

- Credential stuffing attempts

- Fake account creation

- Inventory hoarding or scraping

You can also tighten controls around known high-abuse entry points—like requiring 2FA after repeated login failures, or blocking disposable emails at signup.
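A velocity check per device or IP can be sketched with a sliding-window counter. The class name `SlidingWindowLimiter`, the in-memory storage, and the example limits are assumptions for illustration; a multi-server deployment would typically keep these counters in a shared store.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `max_events` per key within the last `window_seconds`."""

    def __init__(self, max_events: int, window_seconds: float):
        self._max_events = max_events
        self._window = window_seconds
        self._events = defaultdict(deque)  # key -> timestamps of allowed events

    def allow(self, key: str, now: float) -> bool:
        """Record and allow the event, or reject it if the key is over limit."""
        events = self._events[key]
        cutoff = now - self._window
        # Drop timestamps that have aged out of the window.
        while events and events[0] <= cutoff:
            events.popleft()
        if len(events) >= self._max_events:
            return False
        events.append(now)
        return True


# Example: at most 3 login attempts per IP per 60 seconds.
limiter = SlidingWindowLimiter(max_events=3, window_seconds=60)
results = [limiter.allow("198.51.100.7", now=t) for t in (0, 1, 2, 3)]
print(results)  # [True, True, True, False]
```

The key can be an IP, a device fingerprint, an account name, or a combination, depending on which kind of repetition you want to catch. In this sketch rejected attempts are not recorded, so a blocked client regains access as soon as its earlier attempts age out.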

4. Risk-based authentication

Not all sessions deserve the same friction. By evaluating signals like device risk, behavior consistency, and geo anomalies, you can escalate only when it matters:

- Known user on known device? Let them through.

- New device, proxy IP, odd click patterns? Step up with 2FA, CAPTCHA, or phone verification.

This keeps UX smooth for good users while applying pressure only when suspicion is high.

5. Multi-signal scoring

Instead of reacting to any single indicator, assign weights to multiple signals—device fingerprint, behavioral profile, IP trust level—and compute a risk score. Based on that score, dynamically respond with:

- Seamless access

- Step-up challenges (e.g., CAPTCHA, SMS verification)

- Hard blocks or silent drops

This type of adaptive defense is harder to game, because it doesn’t rely on any single signal or visible trigger.
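The scoring idea can be sketched as a weighted average of per-signal risk values mapped onto the three responses listed above. The weights, the signal names, and the cut-offs below are placeholder assumptions for illustration; in practice they would be tuned on real traffic, and the cut-offs are passed as parameters so they can differ per endpoint.

```python
from typing import Dict

# Relative importance of each signal (placeholder values that sum to 1).
WEIGHTS = {"device": 0.40, "behavior": 0.35, "network": 0.25}


def risk_score(signals: Dict[str, float]) -> float:
    """Weighted average of per-signal risk, each clamped to the range 0..1.

    A missing signal is treated as neutral (0.5) rather than as safe.
    """
    total = 0.0
    for name, weight in WEIGHTS.items():
        value = signals.get(name, 0.5)
        total += weight * min(1.0, max(0.0, value))
    return total


def decide(score: float, challenge_at: float = 0.4, block_at: float = 0.8) -> str:
    """Map a risk score to one of: 'allow', 'challenge', 'block'."""
    if score >= block_at:
        return "block"
    if score >= challenge_at:
        return "challenge"
    return "allow"


# A known device with slightly odd behavior on a clean network.
print(decide(risk_score({"device": 0.1, "behavior": 0.3, "network": 0.1})))  # allow
```

Treating a missing signal as neutral means a client cannot lower its score simply by withholding data. A linear weighting is the simplest option; the source describes providers that use trained models instead.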

In 2025, bot detection isn’t about finding a better puzzle. It’s about understanding behavior, context, and risk. Done right, good users glide through unnoticed—while bots struggle to make it past the first step.

Conclusion

CAPTCHAs still have a role to play—but they’re no longer the foundation of effective bot defense. On their own, they create friction for users, not just fraudsters. And as automation gets smarter, even the hardest puzzles are routinely solved or outsourced.

Modern threats demand modern defenses. That means shifting from standalone tests to contextual risk assessment—using signals like device fingerprinting, behavioral patterns, and traffic velocity to make smarter decisions in real time.

The key is balance: friction only when needed, invisibility when possible.

Bottom line: Use CAPTCHAs where they add value, as a backup or secondary control. But don’t rely on them as your first line of defense. In 2025, stopping bots is less about puzzles, and more about understanding intent.