The good old days when bots used PhantomJS and could be detected because they didn’t support basic JavaScript features are over. It’s 2025, and bots have never been more sophisticated. They leverage anti-detect automation frameworks, residential proxies and CAPTCHA farms. Even basic bots built on unmodified headless Chrome have realistic fingerprints, so what does it take to catch bad bots in 2025?

When it comes to bot detection, we distinguish 4 main categories of signals:

- Device fingerprint

- Behavior

- Reputation

- Context

In the next sections of this article, we present these different types of signals and how they can be used for bot detection.

1. Fingerprinting signals

Fingerprinting, also known as browser or device fingerprinting, consists of combining attributes linked to the user’s machine. The combination of these attributes is often unique, hence the analogy with a fingerprint.

Fingerprinting signals can be gathered both on the client side and the server side:

- Client-side fingerprinting: JavaScript can collect browser attributes such as installed fonts, screen resolution, and device capabilities.

- Server-side fingerprinting: Attributes like HTTP headers, TLS fingerprints, and TCP connection patterns can help distinguish automated requests from legitimate human traffic.
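To make the server-side case concrete, here is a minimal sketch of one header-level consistency check. It assumes the request arrived over HTTPS, where Chromium-based browsers send low-entropy User-Agent Client Hints (sec-ch-ua, sec-ch-ua-platform) by default, and that headers are available as a plain object with lower-case names, as in Node's req.headers. The function name headerInconsistencies, the two rules and the example header values are illustrative assumptions, not a complete detector.

```javascript
// Sketch of a server-side fingerprint check. Assumptions: `headers` is a plain
// object with lower-case names (as in Node's req.headers) and the request came
// over HTTPS, where Chromium browsers send low-entropy client hints by default.
function headerInconsistencies(headers) {
  const issues = [];
  const ua = headers["user-agent"] ?? "";
  const claimsChromium = /Chrome\/\d+/.test(ua);

  // A Chromium User-Agent without the sec-ch-ua client hint is suspicious.
  if (claimsChromium && !("sec-ch-ua" in headers)) {
    issues.push("Chromium User-Agent without a sec-ch-ua header");
  }

  // The platform hint is a quoted string such as "macOS"; it should agree
  // with the operating system named in the User-Agent.
  const platform = headers["sec-ch-ua-platform"];
  if (platform !== undefined && ua.includes("Macintosh") && platform !== '"macOS"') {
    issues.push(`User-Agent claims macOS but sec-ch-ua-platform is ${platform}`);
  }
  return issues;
}

// Illustrative header values for a genuine Chrome on macOS.
const chromeOnMac = {
  "user-agent":
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/134.0.0.0 Safari/537.36",
  "sec-ch-ua": '"Chromium";v="134", "Not:A-Brand";v="24", "Google Chrome";v="134"',
  "sec-ch-ua-platform": '"macOS"',
};
// A scripted client that copied the User-Agent string but not the client hints.
const copiedUserAgent = { "user-agent": chromeOnMac["user-agent"] };

console.log(headerInconsistencies(chromeOnMac)); // → []
console.log(headerInconsistencies(copiedUserAgent).length); // → 1
```

A check like this only catches clients that forge the User-Agent without forging the related headers; TLS and TCP fingerprints extend the same idea to lower layers.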

Fingerprints can be used in various ways.

Track bots: Fingerprints can be used as a signature to track and block attackers, even if they delete their session cookies. However, fingerprinting for bot detection is not only about uniqueness and tracking. A significant fraction of fingerprinting attributes are specially crafted challenges that aim to detect the presence of automation frameworks and headless browsers linked to bots.
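As a rough illustration of the tracking use case, a set of collected attributes can be serialized deterministically and hashed into a compact signature that survives cookie deletion. This is a minimal sketch: the attribute names and the fingerprintId helper are hypothetical, and the 32-bit FNV-1a hash is chosen only because it is short and dependency-free. A real engine would also have to cope with attributes that legitimately change over time, such as browser versions.

```javascript
// Sketch: turning a set of fingerprint attributes into a compact signature.
// The attribute names are illustrative; FNV-1a (32-bit) is used here only
// because it is short and dependency-free, not because it is collision-proof.
function fnv1a32(text) {
  let hash = 0x811c9dc5; // FNV offset basis
  for (let i = 0; i < text.length; i++) {
    hash ^= text.charCodeAt(i); // hashes UTF-16 code units, not bytes
    hash = Math.imul(hash, 0x01000193); // FNV prime, 32-bit multiplication
  }
  return (hash >>> 0).toString(16).padStart(8, "0");
}

// Sort keys so that the same attributes always produce the same signature,
// regardless of the order in which they were collected.
function fingerprintId(attributes) {
  const entries = Object.keys(attributes)
    .sort()
    .map((key) => [key, attributes[key]]);
  return fnv1a32(JSON.stringify(entries));
}

const first = fingerprintId({ timezone: "Europe/Paris", screen: "1512x982", cores: 14 });
const second = fingerprintId({ cores: 14, screen: "1512x982", timezone: "Europe/Paris" });
console.log(first === second); // → true
```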

Specially crafted bot challenges: For example, the JavaScript challenge below aims to detect whether a browser is automated using the Chrome DevTools Protocol (CDP), a protocol used by popular bot frameworks such as Puppeteer, Playwright and Selenium.

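```javascript
// CDP detection challenge: when a browser is driven over CDP, the arguments
// of console.log() are serialized to be sent to the automation client, and
// serializing an Error object reads its stack property.
let detected = false;

const e = new Error();
Object.defineProperty(e, "stack", {
  get() {
    // Only reached if something reads e.stack, e.g. CDP serializing the error.
    detected = true;
  },
});

// Part of the challenge: logging the error is what triggers the serialization.
console.log(e);
```

This JavaScript detection technique leverages the fact that automated browsers and automation frameworks like Puppeteer, Playwright and Selenium need to serialize data when they communicate over a WebSocket.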

If detected is true, it indicates that the browser is automated. One side effect of this technique is that it also flags human users who have DevTools open as bots, since DevTools relies on CDP as well. Note that console.log(e) is part of the challenge, since it is what triggers the serialization in CDP.

Some signals are simpler. For example, navigator.webdriver being true indicates that a browser is instrumented. However, most bots hide it by launching Chrome with the '--disable-blink-features=AutomationControlled' flag.

Detecting forged and modified fingerprints: In general, when it comes to bot detection and fraud detection, it’s important to keep in mind that attackers frequently try to hide their real nature by modifying their fingerprint. They may try to erase attributes directly linked to bot activity, such as navigator.webdriver, or to change certain characteristics of their device, like the fact that the browser is running on a Linux server, to appear more human.

Thus, most device fingerprinting scripts used in a bot detection context aim to collect as many signals as possible related to the user’s browser and its version, for example by testing for the presence of recent features. They also leverage signals correlated with the user’s OS, such as the list of fonts, canvas fingerprinting and information about the GPU. They can then correlate the values of these attributes to ensure the user is not lying about the real identity of their browser and device.

Detecting fingerprint inconsistencies: example

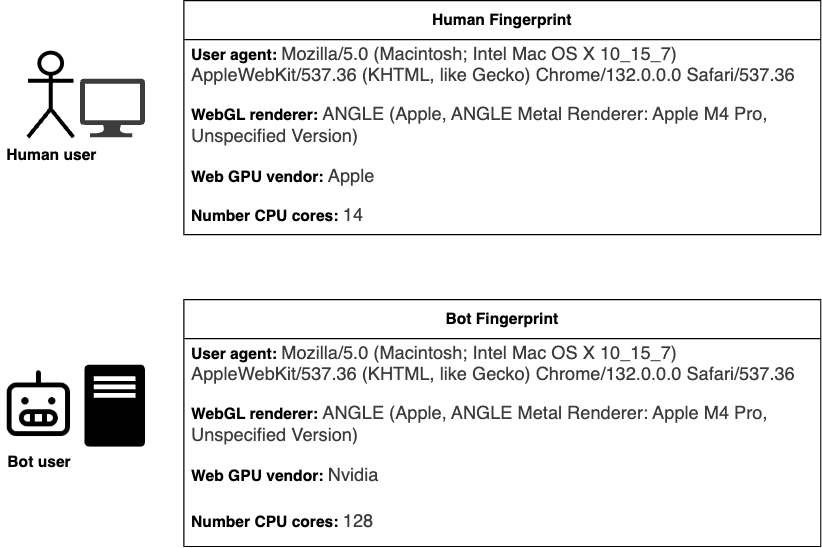

This diagram shows a simple example with a human user and a bot. For each of them, we collect information about the user agent, the WebGL renderer, the WebGPU vendor and the number of CPU cores.

The human user claims to be on macOS with Chrome. All their fingerprinting attributes are consistent with this claim:

- Their WebGL renderer (linked to the GPU) indicates Apple M4 Pro

- The WebGPU API also indicates that the GPU vendor is Apple

- The number of CPU cores is 14, which is consistent with a Mac laptop.

In contrast, while the bot also claims to be on macOS with Chrome, we detect a few inconsistencies:

- While the WebGL renderer value indicates Apple M4 Pro, the WebGPU vendor indicates Nvidia. These kinds of inconsistencies arise when attackers lie about certain fingerprinting signals but fail to update all related attributes accordingly.

- The number of CPU cores, which can be collected using navigator.hardwareConcurrency, can also be checked against the values expected for the claimed device.
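The checks in this example can be sketched as a small rule function. This is a minimal sketch, assuming the attributes have already been collected in the browser (for instance through the WEBGL_debug_renderer_info extension and the WebGPU adapter info) into a plain object. The field names, the findInconsistencies helper, the example renderer string and the rule that a browser claiming macOS should not report an NVIDIA GPU are hypothetical choices for illustration.

```javascript
// Sketch: cross-checking fingerprint attributes that describe the same device.
// Field names and rules are illustrative assumptions, not a production rule set.
const GPU_FAMILIES = ["apple", "nvidia", "amd", "intel"];

// Returns the first known GPU family mentioned in a string, or null.
function gpuFamily(value) {
  const lower = String(value ?? "").toLowerCase();
  return GPU_FAMILIES.find((family) => lower.includes(family)) ?? null;
}

function findInconsistencies(fp) {
  const issues = [];
  const claimsMac = /Macintosh/.test(fp.userAgent ?? "");
  const webglFamily = gpuFamily(fp.webglRenderer);
  const webgpuFamily = gpuFamily(fp.webgpuVendor);

  // WebGL and WebGPU expose the same physical GPU, so their vendors should agree.
  if (webglFamily && webgpuFamily && webglFamily !== webgpuFamily) {
    issues.push(`WebGL says ${webglFamily}, WebGPU says ${webgpuFamily}`);
  }
  // Assumption for this sketch: a browser claiming macOS should not report an NVIDIA GPU.
  if (claimsMac && [webglFamily, webgpuFamily].includes("nvidia")) {
    issues.push("NVIDIA GPU reported by a browser claiming to run on macOS");
  }
  // navigator.hardwareConcurrency should be a positive integer on a real device.
  if (!Number.isInteger(fp.hardwareConcurrency) || fp.hardwareConcurrency < 1) {
    issues.push(`implausible CPU core count: ${fp.hardwareConcurrency}`);
  }
  return issues;
}

const macUserAgent =
  "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/134.0.0.0 Safari/537.36";
const human = {
  userAgent: macUserAgent,
  webglRenderer: "ANGLE (Apple, ANGLE Metal Renderer: Apple M4 Pro, Unspecified Version)",
  webgpuVendor: "apple",
  hardwareConcurrency: 14,
};
const bot = { ...human, webgpuVendor: "nvidia" };

console.log(findInconsistencies(human)); // → []
console.log(findInconsistencies(bot).length); // → 2
```

Hand-written rules like these are easy to read but brittle; they illustrate the idea of correlating attributes rather than a complete rule set.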

2. Behavioral signals

While device fingerprinting enables bot detection engines to verify the authenticity of the user’s browser and device, it’s also important to collect signals related to the user’s behavior. Indeed, bots are programmed to automate interactions, and their behavior often differs from that of real users.

Behavioral analysis looks at how users interact with a website or application. Behavioral signals can be collected both on the client-side (in the browser or in a mobile application) and on the server side.

Client-side behavioral analysis

These signals capture how the user interacts with the website, such as:

- Mouse movements, keystrokes, and scrolling behavior on computers;

- Touch events and swipe patterns on mobile devices.

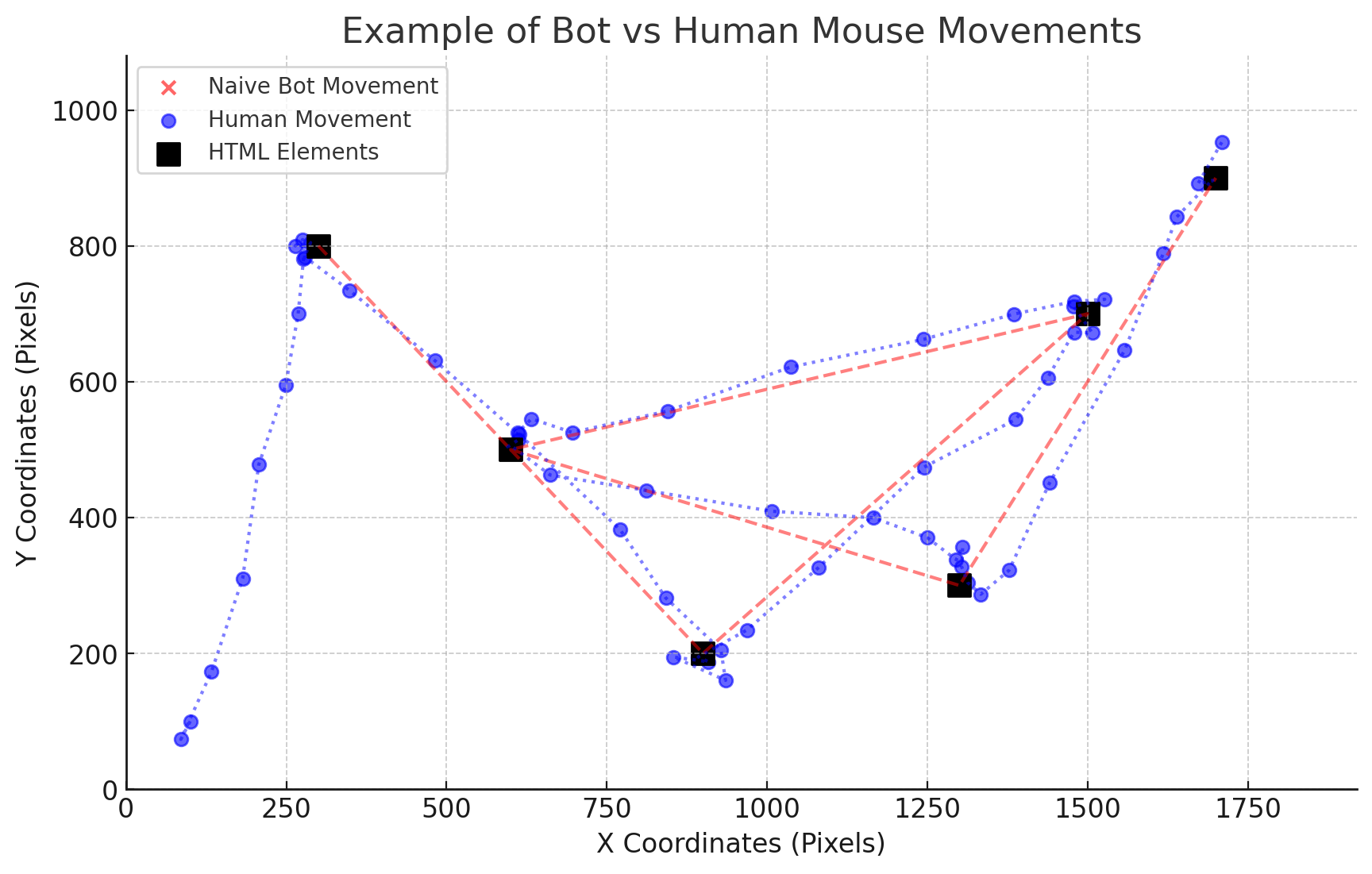

We can leverage the fact that most bots behave differently from human users. For example, bots tend to exhibit linear movements instead of natural, erratic mouse movements. The graph shows an example of bot (red) versus human (blue) mouse movements. The black squares represent important HTML elements like buttons or links.

We see that:

- The bot tends to teleport its mouse between the different HTML elements, while the human user has a more erratic set of movements between them;

- The bot tends to click or move the mouse exactly at the center of the HTML elements, because it retrieves their coordinates programmatically, while human users are less precise.
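The two observations above can be turned into simple numeric features. This is a minimal sketch with hypothetical helper names: it assumes mouse positions between two clicks have been recorded as {x, y} points (in a browser, from the clientX and clientY of mousemove events) and that the clicked element's box is shaped like the output of getBoundingClientRect(). These particular features are an illustrative choice, not the method of any specific detection engine.

```javascript
// Sketch: two simple mouse-movement features. Input format is an assumption:
// points are {x, y} samples between two clicks; rect mirrors getBoundingClientRect().

// Ratio between the straight-line distance and the distance actually
// travelled: 1 means a perfectly straight (or teleported) path.
function pathStraightness(points) {
  if (points.length < 2) return null;
  let travelled = 0;
  for (let i = 1; i < points.length; i++) {
    travelled += Math.hypot(points[i].x - points[i - 1].x, points[i].y - points[i - 1].y);
  }
  const first = points[0];
  const last = points[points.length - 1];
  const direct = Math.hypot(last.x - first.x, last.y - first.y);
  return travelled === 0 ? null : direct / travelled;
}

// Distance between a click and the center of the clicked element, normalized
// by the element size: 0 means the click landed exactly on the center.
function clickCenterOffset(click, rect) {
  const centerX = rect.left + rect.width / 2;
  const centerY = rect.top + rect.height / 2;
  return Math.hypot((click.x - centerX) / rect.width, (click.y - centerY) / rect.height);
}

const button = { left: 100, top: 200, width: 80, height: 30 };
// A bot that teleports to the exact center of the button.
const botPath = [{ x: 0, y: 0 }, { x: 140, y: 215 }];
console.log(pathStraightness(botPath), clickCenterOffset({ x: 140, y: 215 }, button)); // → 1 0
// A human wandering towards the same button and clicking off-center.
const humanPath = [{ x: 0, y: 0 }, { x: 60, y: 120 }, { x: 150, y: 180 }, { x: 125, y: 222 }];
console.log(pathStraightness(humanPath), clickCenterOffset({ x: 125, y: 222 }, button));
```

On their own, such features are noisy (some humans move very straight); they become useful when aggregated over many interactions and fed to a statistical model.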

Server-side behavioral analysis

We can analyze the user’s behavior based on signals collected on the server side, such as:

- Time series of requests (e.g., frequency and intervals)

- Browsing patterns, such as accessing pages too quickly or in an unnatural sequence (e.g., adding a product to the cart without viewing the product page)
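Both kinds of server-side signals can be checked on a session’s request log. This is a minimal sketch under loudly stated assumptions: the log format ({ path, timestamp } with timestamps in milliseconds), the URL scheme (/product/&lt;id&gt;, /cart/add/&lt;id&gt;), the sessionAnomalies helper and the 300 ms threshold are all hypothetical and would need to be adapted to a real application.

```javascript
// Sketch: server-side checks on one session's request log. The log format,
// URL scheme and threshold below are hypothetical.
const MIN_HUMAN_INTERVAL_MS = 300; // illustrative threshold, not a tuned value

function sessionAnomalies(requests) {
  const anomalies = [];
  const viewedProducts = new Set();
  for (let i = 0; i < requests.length; i++) {
    const { path, timestamp } = requests[i];
    // Timing: requests arriving faster than a human could plausibly click.
    if (i > 0 && timestamp - requests[i - 1].timestamp < MIN_HUMAN_INTERVAL_MS) {
      anomalies.push(`request to ${path} came too fast after the previous one`);
    }
    // Sequence: remember product pages, then check cart additions against them.
    const view = path.match(/^\/product\/([^/]+)$/);
    if (view) viewedProducts.add(view[1]);
    const add = path.match(/^\/cart\/add\/([^/]+)$/);
    if (add && !viewedProducts.has(add[1])) {
      anomalies.push(`product ${add[1]} added to cart without viewing its page`);
    }
  }
  return anomalies;
}

const humanSession = [
  { path: "/", timestamp: 0 },
  { path: "/product/42", timestamp: 4000 },
  { path: "/cart/add/42", timestamp: 15000 },
];
const botSession = [
  { path: "/", timestamp: 0 },
  { path: "/cart/add/42", timestamp: 120 },
];

console.log(sessionAnomalies(humanSession)); // → []
console.log(sessionAnomalies(botSession).length); // → 2
```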

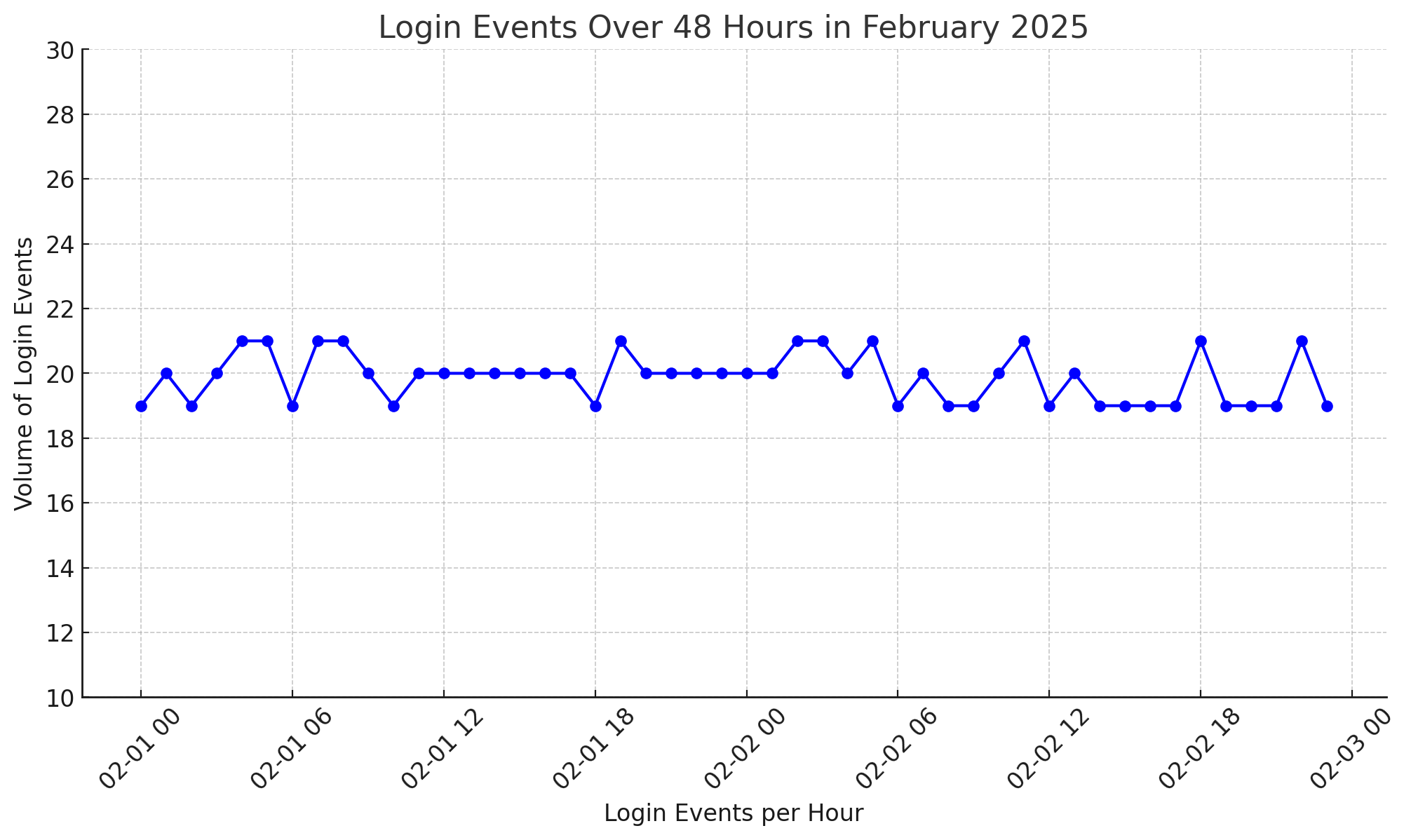

As an example, the graph shows a fictional time series that illustrates a low-and-slow attack conducted from a single IP address. It represents the number of login events per hour over time. Even though the volume of traffic is not extremely high, the pattern is suspicious:

- There is a constant stream of login events, with no interruption during the night;

- The volume of login events per hour is almost always the same, i.e. the variance (or standard deviation) of the number of requests per hour is almost zero.

Raw behavioral signals can be difficult to handle manually or through rules, and they often require statistical analysis or machine learning. Deviations from typical human interaction patterns can indicate bot activity; similarly, a user making requests at exactly the same frequency can indicate bot activity.
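The two properties of the low-and-slow attack can be summarized with basic statistics on the hourly counts. This is a minimal sketch, assuming the series starts at midnight in the site’s local time; the hourlyStats helper, the 0:00 to 5:59 night window and the synthetic series are hypothetical. A human-driven series would usually show a daily cycle and therefore a higher coefficient of variation and fewer active night hours.

```javascript
// Sketch: summary statistics for a per-hour count series. hourlyCounts[i] is
// the number of login events in hour i; the series is assumed to start at
// midnight, and the night window (0:00-5:59) is an illustrative choice.
function hourlyStats(hourlyCounts) {
  const n = hourlyCounts.length;
  const mean = hourlyCounts.reduce((sum, c) => sum + c, 0) / n;
  const variance = hourlyCounts.reduce((sum, c) => sum + (c - mean) ** 2, 0) / n;
  const stdDev = Math.sqrt(variance);
  // Share of night hours with at least one event.
  const nightHours = hourlyCounts.filter((_, i) => i % 24 < 6);
  const activeNightShare = nightHours.filter((c) => c > 0).length / nightHours.length;
  return {
    mean,
    stdDev,
    coefficientOfVariation: mean === 0 ? 0 : stdDev / mean,
    activeNightShare,
  };
}

// Two days of a steady attack: 20 logins every single hour.
const attack = Array(48).fill(20);
// Two days of synthetic human-like traffic: quiet nights, varying daytime volume.
const daily = Array.from({ length: 48 }, (_, i) => (i % 24 < 6 ? 0 : 5 + (i % 24)));

const s = hourlyStats(attack);
console.log(s.coefficientOfVariation, s.activeNightShare); // → 0 1
console.log(hourlyStats(daily));
```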

3. Reputational signals

Bot detection engines compute different reputations for attributes related to the user, such as their IP address, session cookie identifier, or email address. Reputation-based detection relies on historical data and known bad actors to predict whether an entity (IP address, session) is a bot.

IP reputation: The most popular type of reputation is IP address reputation. It consists of computing a score that reflects how likely an IP address is to be used by a bot. If an IP address is frequently used by bots, its reputation decreases and its traffic can be treated more carefully. Detection engines can compute this type of reputation from their own data or using external IP reputation services.

Note that the IP reputation doesn’t necessarily have to be at the granularity of a single IP address. Indeed, most bot detection services tend to be more aggressive towards autonomous systems (AS) linked to data center IPs since they’re often used by bots.
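One possible way to keep reputations at both granularities is a decaying score per key, where a key is either an IP address or an autonomous system. This is a minimal sketch of that idea, not how any particular engine works: the ReputationStore class, the "ip:"/"asn:" key format, the 24-hour half-life and the AS weight are hypothetical parameters. The IP and AS values use documentation-reserved ranges.

```javascript
// Sketch: a decaying reputation score per key, where a key is an IP address or
// an autonomous system number. Half-life, key format and AS weight are hypothetical.
class ReputationStore {
  constructor(halfLifeMs) {
    this.halfLifeMs = halfLifeMs;
    this.entries = new Map(); // key -> { score, updatedAt }
  }

  // Current score after exponential decay since the last update.
  score(key, now) {
    const entry = this.entries.get(key);
    if (!entry) return 0;
    return entry.score * Math.pow(0.5, (now - entry.updatedAt) / this.halfLifeMs);
  }

  // Each confirmed bot event adds 1 to the decayed score.
  recordBotEvent(key, now) {
    this.entries.set(key, { score: this.score(key, now) + 1, updatedAt: now });
  }
}

// Combine IP-level and AS-level evidence: an IP with no history still inherits
// part of the risk of an AS (e.g. a data center) that hosts many bots.
function ipRisk(store, ip, asn, now, asWeight = 0.2) {
  return store.score(`ip:${ip}`, now) + asWeight * store.score(`asn:${asn}`, now);
}

const HOUR = 3600 * 1000;
const store = new ReputationStore(24 * HOUR);
store.recordBotEvent("ip:203.0.113.7", 0);
store.recordBotEvent("asn:AS64500", 0);
store.recordBotEvent("asn:AS64500", 0);

// One day later, a never-seen IP in the same AS carries some inherited risk.
console.log(ipRisk(store, "198.51.100.9", "AS64500", 24 * HOUR)); // → 0.2
```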

Proxy detection: A subfield of IP address reputation is proxy detection. A proxy is a program that enables users to change their IP address by routing traffic through someone else's infrastructure. Bots and fraudsters often use proxies to 1) hide their real IP address and protect their identity and 2) distribute their attacks across thousands of different IP addresses to make the traffic look like it comes from different devices. This helps them bypass traditional bot detection techniques, such as geoblocking and IP address rate limiting.

Detecting proxies, and knowing whether a request originates from one, is therefore key to improving the quality of a bot detection engine.

User reputation: Detection engines can maintain a user reputation based on the previous user activity. The reputation can be impacted positively or negatively depending on the age of the account, the activity pattern, and whether or not some previous bot activity was observed with the user.
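As an illustration of how such factors could be folded into a single number, here is a minimal sketch of a clamped user reputation score. Every input name, cap and weight in userReputation is hypothetical and set by hand for readability; a real engine would derive them from data and would likely use many more activity features.

```javascript
// Sketch: a user reputation score in [0, 1], higher meaning more trusted.
// The inputs and every weight below are hypothetical, chosen for illustration.
function userReputation({ accountAgeDays, successfulLogins, botIncidents }) {
  let score = 0.5; // neutral starting point for an unknown account
  score += Math.min(accountAgeDays / 365, 1) * 0.2; // older accounts earn trust
  score += Math.min(successfulLogins / 50, 1) * 0.2; // sustained normal activity
  score -= Math.min(botIncidents, 3) * 0.25; // past bot activity weighs heavily
  return Math.max(0, Math.min(1, score));
}

const established = userReputation({ accountAgeDays: 730, successfulLogins: 100, botIncidents: 0 });
const suspicious = userReputation({ accountAgeDays: 0, successfulLogins: 0, botIncidents: 2 });
console.log(established > suspicious); // → true
```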

Reputation-based signals are often not enough to fully identify bot activity on their own. However, they allow security systems to act on known threats, based on previous activity or external knowledge, even before any behavioral or fingerprinting anomaly is detected.

4. Contextual signals and weak signals correlation

Bots often attempt to blend in, but inconsistencies in contextual attributes and unusual combinations of signals can reveal automated activity. By cross-referencing multiple weak signals, security systems can identify suspicious patterns.

Time context: Traffic at unusual hours can be suspicious. For example, an e-commerce site receiving high traffic at 3 AM may warrant more scrutiny than the same traffic during the day.

Geolocation context: Detection engines can correlate information related to the user’s location, languages and timezone to check that they are consistent. A mismatch between these attributes doesn’t necessarily indicate a bot (it can happen for people who are traveling), but it is still unusual and should be taken into account by the detection engine when making its decision.
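A geolocation consistency check can be sketched as a comparison between the country derived from the IP address and the timezone and languages reported by the browser (for example via Intl.DateTimeFormat().resolvedOptions().timeZone and navigator.languages). This is a minimal sketch: the two lookup tables are tiny hypothetical excerpts, the locationMismatches helper is illustrative, and real engines would rely on complete datasets such as the IANA time zone database. The output is a list of weak signals, not a verdict.

```javascript
// Sketch: weak-signal checks on location context. The lookup tables are tiny
// hypothetical excerpts; a real engine would use complete datasets.
const COUNTRY_TIME_ZONES = {
  FR: ["Europe/Paris"],
  US: ["America/New_York", "America/Chicago", "America/Denver", "America/Los_Angeles"],
};
const COUNTRY_LANGUAGES = { FR: ["fr"], US: ["en", "es"] };

// ipCountry comes from IP geolocation; timeZone and languages from the browser.
function locationMismatches({ ipCountry, timeZone, languages }) {
  const mismatches = [];
  const zones = COUNTRY_TIME_ZONES[ipCountry];
  if (zones && !zones.includes(timeZone)) {
    mismatches.push(`time zone ${timeZone} is unusual for ${ipCountry}`);
  }
  const expected = COUNTRY_LANGUAGES[ipCountry];
  // Compare primary language subtags only, e.g. "fr-FR" -> "fr".
  const primaries = languages.map((tag) => tag.split("-")[0].toLowerCase());
  if (expected && !primaries.some((lang) => expected.includes(lang))) {
    mismatches.push(`no browser language matches the languages expected for ${ipCountry}`);
  }
  return mismatches; // weak signals: travelers can legitimately trigger them
}

const local = { ipCountry: "FR", timeZone: "Europe/Paris", languages: ["fr-FR", "en-US"] };
const mismatched = { ipCountry: "FR", timeZone: "America/New_York", languages: ["en-US"] };
console.log(locationMismatches(local)); // → []
console.log(locationMismatches(mismatched).length); // → 2
```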

Contextual signals can’t be used in isolation to detect bots. While they may indicate that some activity or user configuration is unusual, this is often not enough to make a reliable decision, so they need to be combined with other types of signals.

Why a multi-layered approach is essential

There is no silver bullet when it comes to bot detection. Modern bots use advanced techniques such as headless browsers, CAPTCHA-solving services and fake human interactions to evade detection, and they have many libraries and online services at their disposal to avoid being detected.

Thus, relying on just one type of signal is insufficient. Instead, effective bot detection requires a multi-layered approach, combining fingerprinting, behavioral, reputational, and contextual signals to create a more robust defense.

Moreover, it’s often challenging to make a decision based on a single attribute or category of signals. Indeed, most sophisticated bots spoof well-known JavaScript signals such as navigator.webdriver. You often need to combine signals related to the fingerprint, the user behavior and proxy detection using machine learning to detect abnormal patterns.
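The idea of combining heterogeneous signals can be illustrated with a logistic function over a few features, the same functional form as a logistic regression model. This is a minimal sketch: the feature names, weights and bias are hypothetical and set by hand, whereas a real model would learn them from labeled traffic and use far more inputs. It shows how a weak contextual signal alone stays below a decision threshold, while several independent signals together push the score up.

```javascript
// Sketch: combining weak signals into one bot probability with a logistic
// function. Feature names, weights and bias are hypothetical, not learned.
const WEIGHTS = {
  fingerprintInconsistencies: 1.5, // count of failed consistency checks
  straightMousePaths: 2.0, // share of perfectly straight paths (0..1)
  proxyDetected: 1.2, // 1 if the IP looks like a proxy, else 0
  locationMismatches: 0.4, // count of contextual mismatches
};
const BIAS = -3;

function botProbability(features) {
  let z = BIAS;
  for (const [name, weight] of Object.entries(WEIGHTS)) {
    z += weight * (features[name] ?? 0); // missing features count as 0
  }
  return 1 / (1 + Math.exp(-z));
}

const clean = botProbability({});
const traveler = botProbability({ locationMismatches: 2 });
const likelyBot = botProbability({
  fingerprintInconsistencies: 2,
  straightMousePaths: 1,
  proxyDetected: 1,
  locationMismatches: 1,
});
console.log(clean < 0.5, traveler < 0.5, likelyBot > 0.5); // → true true true
```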

Real-world bot detection engines, like the one we build at Castle, often combine different approaches, e.g. ML-based outlier detection on device fingerprints. Using machine learning for detection makes it possible to:

- React more quickly to ever-evolving attackers;

- Combine thousands of data points, fingerprinting signals and contextual information;

- Properly handle edge cases and the diversity of users on the web, to have an optimal detection that doesn’t impact user experience.

Conclusion

As sophisticated bots continue to evolve, the battle between defenders and attackers remains dynamic. A multi-layered approach combining fingerprinting, behavioral analysis, reputation systems, and contextual signals represents the most effective strategy for detecting and preventing automated threats. By leveraging advanced machine learning and continuously adapting to new evasion techniques, organizations can maintain robust protection against malicious bot activity while ensuring a seamless experience for legitimate users.